New o1 model can solve complex tasks due to a new processing step before answering.

See full article...

The VCs don't believe the hype. They're looking at revenues and exit prices, and the AGI stuff is irrelevant to that. If you can automate even 10% of commercial writing, you're basically going to mint money, which means a hockey-stick revenue line that gets you the IPO in 2 years.

Yeah, if they just said "hey, this is good for summarization and brainstorming, and certain professions like coders and lawyers/paralegals will see efficiency gains", I'd be far less of a jerk about their janky tech.

WE ARE SOLVING GENERAL INTELLIGENCE is total bullshit, but that's what gets you the 150 billion valuation. Being honest gets you thrown out of the VC office.

Is there any evidence that such a workflow is superior to a human-only workflow in any way, by any amount?

Doing a first draft of a 10-page paper on the Babylonian economy. That draft is going to need human editing and fact-checking, but the amount of work required to create the finished paper will be much lower than if a person researched and wrote the entire thing from scratch.

For further information on this, consult any college student or professor.

It seems pretty likely that first drafts of most types of academic and commercial writing are going to be done by AI in the relatively near future.

Whether that represents a 10x improvement is up to you, but it's going to disrupt a whole lot of jobs.

Machines can't think... same as bears can't wrestle.

Who wants to go in the ring?

Superior by what measure? If LLMs can produce a first draft that's 80% as good as a human's at 10% of the price, the LLMs are going to take over that market.

Is there any evidence that such a workflow is superior to a human-only workflow in any way, by any amount?

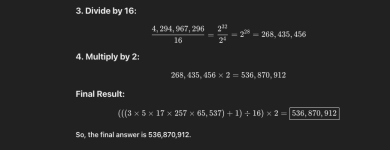

Nah, it just tried a different but similar number, just like when you write "are you sure?"

I found it works to tell it to check its tokenization. This is from three weeks ago. Well, it sort of worked...

View attachment 90220

Programming is the thing I've tried again and again with ChatGPT, and I don't think I've ever gotten a correct piece of code... I guess it depends on the type of code.

I feel like they'd be better off optimizing it for programming.

That's the one task that I routinely have success with and I can absolutely understand the monetary value of it.

Any other tasks I've tried with generative AI tools tend to result in frustration: times where it almost gets it right, but not quite, or times where it worked yesterday but not today.

Programming is also an area where you don't necessarily need it to be perfect. I'm thinking in terms of testing things out and not production level coding.

I’m pretty confident that if OpenAI just paid some guy five bucks to answer each time a “how many letters” query came up, they’d have saved a significant amount of money by now.

I'm an 'AI' optimist. These techniques can do valuable things. You just have to make sure that you are measuring reasonably. If I set the AI a task, what percentage of the time will it be correct? How serious will the errors be? Then compare that to the average human doing the same thing. People make errors too; all the AI has to do is be better at these tasks than the average human for it to very quickly save companies money. The cost to run these models (vs. training them) is nothing compared to the cost of paying a human to sit in a chair and type. There are a very large number of currently human-driven tasks that can be offloaded to this kind of AI.
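To make that kind of measuring concrete, here is a minimal Python sketch of the comparison: expected cost per task as the cost of doing the task plus the error rate times the average cost of an error. Every number in it is an invented placeholder, not a measurement, and the `Worker` class is purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    """Illustrative per-task profile; the numbers used below are invented."""
    name: str
    cost_per_task: float   # cost of having this worker do one task
    error_rate: float      # fraction of tasks with at least one error
    avg_error_cost: float  # average cost to catch, fix, or absorb one erroneous task

    def expected_cost(self) -> float:
        # Cost of doing the task plus the expected cost of its errors
        return self.cost_per_task + self.error_rate * self.avg_error_cost


human = Worker("human", cost_per_task=50.0, error_rate=0.05, avg_error_cost=200.0)
model = Worker("LLM", cost_per_task=0.50, error_rate=0.10, avg_error_cost=200.0)
# Same error rate as `model`, but each error is far more expensive
risky = Worker("LLM, severe errors", cost_per_task=0.50, error_rate=0.10, avg_error_cost=1000.0)

for w in (human, model, risky):
    print(f"{w.name}: {w.expected_cost():.2f}")
```

This prints 60.00, 20.50 and 100.50. The third profile is why the severity question matters: with the same error rate but costlier errors, the cheap option stops being the cheaper one.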

By any measure: total time to complete the task, quality of the end result, total cost of completing the task.

Superior by what measure? If LLMs can produce a first draft that's 80% as good as a human's at 10% of the price, the LLMs are going to take over that market.

LLMs are tools. Thinking about them as intelligences is a category error.

Now can it count how many s's are in Mississippi?

How many "r"s are there in the word "strawberry"? Step through your reasoning.

To determine how many "r"s are in the word "strawberry," let's go through the word letter by letter:

- s - no "r"
- t - no "r"
- r - this is the first "r"
- a - no "r"
- w - no "r"
- b - no "r"
- e - no "r"
- r - this is the second "r"
- r - this is the third "r"
- y - no "r"

Counting the "r"s we identified, we have:

- The first "r" at position 3
- The second "r" at position 8
- The third "r" at position 9

Thus, there are a total of 3 "r"s in the word "strawberry."
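For comparison, the deterministic version of that letter-by-letter walk is tiny. A minimal Python sketch; the `letter_positions` helper is hypothetical, and matching case-insensitively is an assumption:

```python
def letter_positions(word: str, letter: str) -> list[int]:
    """Return the 1-based positions where `letter` occurs in `word`, ignoring case."""
    target = letter.lower()
    return [i for i, ch in enumerate(word.lower(), start=1) if ch == target]


print(letter_positions("strawberry", "r"))        # [3, 8, 9]
print(len(letter_positions("Mississippi", "s")))  # 4
```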

Great! Using the same strategy, can you tell me how many "s"es there are in the sentence "Ars technica's posters who comment on LLM articles aren't always the most informed, and the discussion sometimes degrades into polemics"?

Certainly! Let's go through the sentence letter by letter to count the number of "s"es.

The sentence is:

"Ars technica's posters who comment on LLM articles aren't always the most informed, and the discussion sometimes degrades into polemics"

- A - no "s"
- r - no "s"
- s - this is the first "s"

<snip> I won't bore you with the iteration through every single letter of that sentence; suffice to say that it lists every letter in turn and counts them up correctly: </snip>

- p - no "s"
- o - no "s"
- l - no "s"
- e - no "s"
- m - no "s"
- i - no "s"
- c - no "s"
- s - this is the fourteenth "s"

Counting all the "s"es, we find there are a total of 14 "s"es in the sentence.

(Checked with `perl -e '$_ = "Ars technica\x27s posters who comment on LLM articles aren\x27t always the most informed, and the discussion sometimes degrades into polemics"; print tr/s/s/, "\n"'`; 14 is the correct answer.)

I guess they get some points for being self-aware with the naming, at least. If anyone doesn't know the reference, it's a pretty famous example of the problem with how tokens work:

View attachment 90214
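A toy illustration of the token problem: the sketch below splits text by greedy longest match against a tiny, invented vocabulary. Real tokenizers (BPE and similar) learn their vocabularies from data and differ in detail, so treat `VOCAB`, `TOKEN_ID` and `greedy_tokenize` as hypothetical. What it shows is that the model receives token IDs, not letters, so the three r's in "strawberry" are not directly visible to it.

```python
# Hypothetical toy vocabulary; real tokenizers typically learn tens of thousands of pieces
VOCAB = {"straw", "berry", "str", "aw", "a", "b", "e", "r", "s", "t", "w", "y"}
TOKEN_ID = {piece: i for i, piece in enumerate(sorted(VOCAB))}


def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text by repeatedly taking the longest vocabulary entry that matches."""
    longest = max(len(piece) for piece in vocab)
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            # Nothing in the vocabulary matched: emit the single character as-is
            tokens.append(text[i])
            i += 1
    return tokens


tokens = greedy_tokenize("strawberry", VOCAB)
ids = [TOKEN_ID[t] for t in tokens]
print(tokens)  # ['straw', 'berry']
print(ids)     # [8, 3]: what the model actually receives, with no 'r' in sight
```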

The code name was months before anyone ran that problem.

I don't actually believe that. Do you have some documentation?

Believe it or not, the name Strawberry does not come from the “How many r’s are in strawberry” meme. We just chose a random word. As far as we know it was a complete coincidence.

The issue with counting r’s is not that people are using LLMs to count r’s; it’s that it’s really hard to predict ahead of time what LLMs will get wrong.

I think one of the biggest problems with anthropomorphizing LLMs is that we judge them by things that are trivial to humans but make them seem dumb (the strawberry r's that have been done to death). Clearly the value of a new tool lies not in the stuff it cannot do, but in the things it can do. Instead of focusing on tasks that require human intelligence, focus should be placed on the things that an LLM already does 10x better than humans. They don't offer human intelligence, and might never, but they offer an orthogonal kind of intelligence that can complement ours.

lol, in the "believe it or not" part, I definitely choose "not".

For people who don't click on tweets:

View attachment 90262

There are about a billion studies of LLMs out there. Have at it if you want statistics.

By any measure: total time to complete the task, quality of the end result, total cost of completing the task.

Generally agreed, though they’d still need to include the caveat that it might get details wrong in the summary, and for THE LOVE OF GOD don’t rely on it for details of legal cases. Which reinforces your point, if anything.

Yeah, if they just said "hey, this is good for summarization and brainstorming, and certain professions like coders and lawyers/paralegals will see efficiency gains", I'd be far less of a jerk about their janky tech.

I edited the post to add more context: the original strawberry r-count post on TikTok that went viral was posted August 26th. Sam Altman's post of a picture of strawberries was August 7th.

I don't know anything about the TikTok video; I never saw it. The screenshot I posted is from June.

https://community.openai.com/t/incorrect-count-of-r-characters-in-the-word-strawberry/829618

Yeah, I just found the bug report a little while ago and updated as you were posting. So it's certainly conceivable that it came from the bug report/meme.

Hang on - the people who write papers on Babylonian history are almost entirely college students. And they aren’t paid to do it; they pay to do it.

Superior by what measure? If LLMs can produce a first draft that's 80% as good as a human's at 10% of the price, the LLMs are going to take over that market.

LLMs are tools. Thinking about them as intelligences is a category error.

Sorry, didn't mean for us to be ships passing in the night.

Anyway, no big deal, but I don't know how they can claim it's random; who would believe that? Just lean into it.

I explicitly demanded analysis of the severity of the errors in the original post. There are loads of tasks for which AI already makes fewer, less consequential errors. And AI is likely to improve with further development.

That’s only true if you assume all errors (and all patterns of errors) are equally bad.

Doing things right in the first place? Things are done as cheaply as possible in the first place and always will be. Up to now that's meant hiring entry-level employees to do first-draft work and then having that edited by one or more layers of supervisors. No one's going to pay to get the first draft perfect or even very good. If LLMs can do it cheaper and even close to as well, they'll take over.

Hang on - the people who write papers on Babylonian history are almost entirely college students. And they aren’t paid to do it; they pay to do it.

The product here is automated cheating on college assignments, which seems a pretty dicey business case for the valuation. Leaving moral qualms aside, there’s the risk that universities wise up and move to alternative assessment methods just to stop people using your product.

My broader issue with the LLM business case is that it’s based on an assumption that fixing dodgy output is a better process than doing things right in the first place. There’s a lot of work in the business process sphere that says the opposite, and it’s well established in computer security that security should be built in rather than bolted on.

Is there any evidence that such a workflow is superior to a human-only workflow in any way, by any amount?

There's the very best kind of evidence: people choosing to use it instead of doing the work themselves. Surely if, for example, it made their lives harder rather than easier, they would not, so very very consistently, continue making this choice.

So the real question is: given an arbitrary sequence of characters, or better yet, an arbitrary sequence of multi-letter tokens, can it still correctly count the occurrences of a given letter in general?
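One way to probe that question is to test on random strings, for which the model cannot have memorized spellings. A minimal Python sketch of such a harness; `ask_model` is a hypothetical placeholder that you would replace with an actual model call, and here it simply returns the true count:

```python
import random
import string


def make_cases(n: int, length: int, seed: int = 0) -> list[tuple[str, str, int]]:
    """Build (text, letter, true_count) cases from random lowercase strings."""
    rng = random.Random(seed)  # seeded so the test set is reproducible
    cases = []
    for _ in range(n):
        text = "".join(rng.choice(string.ascii_lowercase) for _ in range(length))
        letter = rng.choice(text)  # pick a letter that occurs at least once
        cases.append((text, letter, text.count(letter)))
    return cases


def accuracy(answer_fn, cases: list[tuple[str, str, int]]) -> float:
    """Fraction of cases where answer_fn(text, letter) returns the true count."""
    correct = sum(1 for text, letter, truth in cases if answer_fn(text, letter) == truth)
    return correct / len(cases)


def ask_model(text: str, letter: str) -> int:
    # Hypothetical placeholder: replace with a real model query that parses an integer answer
    return text.count(letter)


cases = make_cases(n=100, length=20)
print(accuracy(ask_model, cases))
```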

Fails if you intentionally misspell a word. It gets so excited about correcting your spelling that it forgets its hardcoded letter counting.

View attachment 90232

strawberry.rs said: This will not fail:

### System

You are a helpful assistant. You cannot count letters in a word by yourself because you see in tokens, not letters. Use the `count_letters` tool to overcome this limitation.

### User

Count the number of r's in 'strawberry'

### Assistant

````json

{"type":"tool_use","id":"toolu_01RTeVZAot5dv6Hz7CAtzVQc","name":"count_letters","input":{"letter":"r","string":"strawberry"}}

````

### Tool

````json

{"type":"tool_result","tool_use_id":"toolu_01RTeVZAot5dv6Hz7CAtzVQc","content":"3","is_error":false}

````

### Assistant

The number of r's in 'strawberry' is 3.
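On the application side, the tool in that transcript only has to do exact string counting. A minimal sketch of a handler, assuming the `tool_use`/`tool_result` JSON shapes shown above; `count_letters`, `TOOLS` and `handle_tool_use` are hypothetical names, not any vendor SDK's API:

```python
import json


def count_letters(letter: str, string: str) -> int:
    """Count exact, case-sensitive occurrences of one letter in a string."""
    if len(letter) != 1:
        raise ValueError("letter must be a single character")
    return string.count(letter)


# Hypothetical registry mapping tool names (as sent by the model) to functions
TOOLS = {"count_letters": count_letters}


def handle_tool_use(block: dict) -> dict:
    """Execute one tool_use block and wrap the outcome as a tool_result block."""
    tool_id = block.get("id", "")
    try:
        result = TOOLS[block["name"]](**block["input"])
        return {"type": "tool_result", "tool_use_id": tool_id,
                "content": str(result), "is_error": False}
    except (KeyError, TypeError, ValueError) as exc:
        # Unknown tool, bad arguments or invalid letter: report instead of crashing
        return {"type": "tool_result", "tool_use_id": tool_id,
                "content": f"error: {exc}", "is_error": True}


call = json.loads('{"type":"tool_use","id":"toolu_01RTeVZAot5dv6Hz7CAtzVQc",'
                  '"name":"count_letters","input":{"letter":"r","string":"strawberry"}}')
print(json.dumps(handle_tool_use(call)))
print(count_letters("r", "strawbery"))  # misspelled on purpose: prints 2
```

Because the tool counts the exact string it is given, a deliberately misspelled "strawbery" gets 2, not a "corrected" 3; whether the model passes the misspelled string through unchanged is a separate question.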

It is the dumbest possible benchmark. It speaks far more to the ignorance of those using it than to the relative intelligence of any given model.

So many people concerned with "how many r's in strawberry"

We believe that a hidden chain of thought presents a unique opportunity for monitoring models.

I literally wrote an app to do all the reading for people, and they still respond by not reading what I've written.

You didn't invent the idea.

A lot of people had the idea of having some sort of inner voice. Westworld is prior art, and they cribbed it from this guy:

I sent you guys a tip ages ago to indicate that I was working on this, and nobody ever followed up.

A lot of the proposed work for LLMs relies on perverse incentives within workplaces. “Forced to write documents no-one will care about? Code that you’ll never need to debug or understand? Use LLMs to automate your useless busywork and code that should have been a template!” It’s a market, sure, but it’s not exactly how this stuff is being sold.

Are they using it because it's quick and convenient or because the quality of the end result is better? We don't have that data.

Easier lines up with convenience more often than quality.

Now, doing things for convenience is perfectly fine, but then one needs to be clear about which dimension is being valued.