Researchers build a RISC-V processor using a 2D semiconductor

- Thread starter JournalBot

- Start date

> Their claims seem a little thin.

I don't know what you're talking about. They were plane from what I read.


jevandezande

Wise, Aged Ars Veteran

> In any case, the electronic properties of these materials are strictly a product of the orbital configurations of the molecule itself; there is no bulk material from which bulk properties can emerge.

I'm not sure what this sentence is supposed to mean. We still have a bulk material (i.e., not a molecule), even if it is only a single layer. The bulk properties will be different than for 3D (e.g., phonon hydrodynamics in two-dimensional materials), but not nonexistent, and thus can also affect the electronic properties.


This is quite a technical achievement, and I’m sure the techniques involved will find applications.

There’s a slightly funny aspect—am I right to believe that this has a single molecule length… in the height of the chip? That was not the dimension we were obsessing over!

But IIRC the main interest in these lower-dimensional nanostructures comes from the physics of the things: the Schrödinger wave equation has solutions that look quite different in 2-D or 1-D, right?


hamjudo2000

Smack-Fu Master, in training

That is an amazing proof of concept. The performance will go up by orders of magnitude once they figure out how to incorporate P-channel MOSFETs into the circuits. I imagine they will be able to get plenty of funding to do that research.

Since I can't read the paper yet, I don't know anything about the wiring or the short-term potential for stacking circuits. From this article, it appears we will see some amazing sample devices in a few years.


I'm just imagining what a Beowulf cluster of these could accomplish....


If this process can be adapted to current silicon semiconductor fabs without too much trouble, then it will become something really interesting. But I didn't see any comparison in terms of power, speed, temperature limits, etc. So it's a neat proof of concept, but it still needs a lot more work.


I also TIL another semi-related thing; I didn't know RISC-V 32-bit could be implemented with under 6K transistors. Native Linux executed on under 6K transistors. That's pretty cool.


Steak and potatoes

Seniorius Lurkius

Given that they are using a bit-sequential RISC-V design, I wonder if they are using SERV or at least drawing inspiration from it. Also, if you are interested in how a 32-bit processor can fit in so little logic, SERV's documentation is pretty good, but very technical.


David Mayer

Wise, Aged Ars Veteran

> I don't know what you're talking about. They were plane from what I read.

Your wordplay is falling flat.


David Mayer

Wise, Aged Ars Veteran


David Mayer

Wise, Aged Ars Veteran

> I also TIL another semi-related thing; I didn't know RISC-V 32-bit could be implemented with under 6K transistors. Native Linux executed on under 6K transistors. That's pretty cool.

Given enough memory, it should be possible to run Linux on any Turing machine. I did a bit of searching and there's no clear consensus on the number of transistors required to make such a machine, but the numbers range from single digits to a couple hundred.


> Given enough memory, it should be possible to run Linux on any Turing machine. I did a bit of searching and there's no clear consensus on the number of transistors required to make such a machine, but the numbers range from single digits to a couple hundred.

Oh yes, the Linux 4004 Project [Ars]! Interestingly, it emulated a 32-bit RISC instruction set to boot Linux in 4.7 days on 1970s-era silicon! I was actually aware of it, which is why I said "Native Linux" to disambiguate.

Good to bring this up among feats of Turing completeness. Also Turing-related: Conway's Life running inside Conway's Life [YouTube], or the JavaScript demo of Life running inside Life in real time via GPU shaders [works on modern phones!] [minor cheat: it only emulates one zoom level deep, but with a sleight-of-programming blend-to-reset, the zoom is infinite!].

What impresses me about how they optimized RISC-V is that the transistor-count difference between a 4004 (4-bit) and a simplified 32-bit RISC-V is only roughly 2.5x.

~2300 versus ~5900 transistors for 4-bit versus 32-bit native. I didn't know the difference was that small.


> I'm just imagining what a Beowulf cluster of these could accomplish....

It could require many years of operation to get the first result; perhaps then we will know.


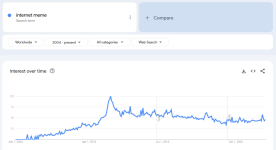

OT, but what happened to graphene? It was getting hyped, and then 3D printing or wearables became The Thing and we don't hear about it much.

Is it like aerogel, where it suddenly turns up somewhere quite mundane?


> ~2300 versus ~5900 transistors for 4-bit versus 32-bit native. I didn't know that was that tiny a difference.

Since any 1-bit computer (even a relay-based one) can emulate any modern word-sized computer, it really comes down to 1-bit microcode ROM size vs. 1-bit hardware trade-offs. Even 1-bit hardware can efficiently emulate 64-bit architectures using hardware and minimal microcode, but VERY slowly in either case. This is fundamental to all Turing-complete machines.


> I also TIL another semi-related thing; I didn't know RISC-V 32-bit could be implemented with under 6K transistors. Native Linux executed on under 6K transistors. That's pretty cool.

It seems much too small to me. I was thinking there had to be a mistake.


> It seems much too small to me. I was thinking there had to be a mistake.

The original RISC-I had something like 44K transistors [Wikipedia]!

They didn't have the transistor-count/circuitry-reuse optimizing software in the 1980s that exists today.

Apparently, the article mentions simplifications such as calculating 32-bit registers 1 bit at a time, requiring 32 clock cycles for one addition. Other than that, elsewhere, I just read that they pulled off quite a few optimization feats to cram a 32-bit native instruction set into under 6K transistors.
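For anyone wondering how one adder handles a 32-bit add in 32 clocks: a bit-serial datapath reuses a single 1-bit full adder and feeds its carry-out back through a flip-flop into the next cycle. Below is a rough Python model of that idea, assuming LSB-first operation and one bit per clock. It is not the circuit from the paper, and `full_adder`/`bit_serial_add` are made-up names for illustration.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder: three inputs in, (sum, carry_out) out."""
    s = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return s, carry_out


def bit_serial_add(x: int, y: int, width: int = 32) -> tuple[int, int, int]:
    """Add two width-bit words with a single full adder, one bit per clock.

    Returns (sum mod 2**width, final carry, clock cycles used).
    """
    carry = 0   # models the carry flip-flop, cleared before the first bit
    result = 0
    cycles = 0
    for i in range(width):           # least significant bit first
        a = (x >> i) & 1
        b = (y >> i) & 1
        s, carry = full_adder(a, b, carry)
        result |= s << i             # shift the sum bit into the result register
        cycles += 1                  # one clock per bit position
    return result, carry, cycles
```

For example, `bit_serial_add(0xFFFFFFFF, 1)` returns `(0, 1, 32)`: the carry is carried forward through all 32 cycles and falls out the top. The trade-off is the one discussed in the thread: one adder's worth of transistors, but 32 clocks per add instead of one.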

I wonder how efficient this RISC-V CPU would be compared to a Z-80 (~8900 transistors) in silicon manufactured from a 1980s-era fab!

EDIT: Napkin exercise moment, checked some stats on some retro CPUs. Even 32 clock cycles for 32-bit math seems ginormously faster than a 6502 doing 32-bit addition (multiple hundred cycles), or even a Z80 (almost a hundred cycles). This does not consider memory vs register performance, as an unknown, though...

EDIT2: Better answer below. Both Z80 and 6502 can do it in well under 100. Upvote those posts instead!


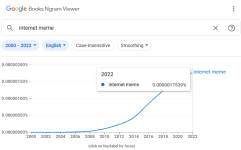

It's so old, it pre-dates calling things memes....


What is the benefit of this material/approach over “traditional” ones? The end of the article implies that at low clock speeds it is much lower power than silicon. Is there any info available offering comparisons? I could see utility for (slow) ultra-low-power devices like remote sensors, but my experience with such is that issues with access to the sensor data (power and other communication limitations) outweigh much of the purported benefit (and we had very low power silicon-based sensors).


> What is the benefit of this material/approach over “traditional” ones? The end of the article implies that at low clock speeds it is much lower power than silicon. Is there any info available offering comparisons? I could see utility for (slow) ultra-low-power devices like remote sensors, but my experience with such is that issues with access to the sensor data (power and other communication limitations) outweigh much of the purported benefit (and we had very low power silicon-based sensors).

I guess if the sensor output is for local consumption (say, for spacecraft navigation) that might be useful, but I assume there are weird applications where an ultrathin sensor layer that generates little heat can be helpful, like between two stacked chips or in a display where thinness is the more important feature. But yeah, remote sensors that then transmit the data sort of ruin the savings unless a summary can massively compress the data transmitted.


> I also TIL another semi-related thing; I didn't know RISC-V 32-bit could be implemented with under 6K transistors. Native Linux executed on under 6K transistors. That's pretty cool.

Makes you wonder how it would run, doesn't it? Just from a manufacturing point of view, it's kind of amazing, isn't it?


Andrew Wade

Ars Centurion

> It seems much too small to me. I was thinking there had to be a mistake.

Yeah, that doesn't sound right; the register file alone would eat up that budget. I don't particularly want to pay for the paper, but I think this is a clue: "This also required on-board buffers to store the intermediate results." My guess is that they've moved a lot off-chip that would normally be on-chip, even in early microprocessors.


> EDIT: Napkin exercise moment, checked some stats on some retro CPUs. Even 32 clock cycles for 32-bit math seems ginormously faster than a 6502 doing 32-bit addition (multiple hundred cycles), or even a Z80 (almost a hundred cycles). This does not consider memory vs register performance, as an unknown, though...

Code:

    ; 6502 32-bit addition: C = A + B (4-byte little-endian values)
    ;
    ADD32   CLC             ; 2 cycles
            LDA A+0         ; 4 cycles
            ADC B+0         ; 4 cycles
            STA C+0         ; 4 cycles
            LDA A+1         ; 4 cycles
            ADC B+1         ; 4 cycles
            STA C+1         ; 4 cycles
            LDA A+2         ; 4 cycles
            ADC B+2         ; 4 cycles
            STA C+2         ; 4 cycles
            LDA A+3         ; 4 cycles
            ADC B+3         ; 4 cycles
            STA C+3         ; 4 cycles
            RTS             ; 6 cycles
                            ; 56 total

    ; Shorter, but slower
    ;
    ADD32L  LDX #$FC        ; 2 cycles
            CLC             ; 2 cycles (carry must be clear before the first ADC)
    LOOP    LDA A-$FC,X     ; 4*4 cycles (+1 per page crossing)
            ADC B-$FC,X     ; 4*4 cycles (+1 per page crossing)
            STA C-$FC,X     ; 4*5 cycles (STA abs,X always takes 5)
            INX             ; 4*2 cycles (INX leaves carry untouched)
            BNE LOOP        ; 3*3 + 2 cycles
            RTS             ; 6 cycles
                            ; 81 total, more if the indexing crosses a page

    A       DS 4
    B       DS 4
    C       DS 4


> I also TIL another semi-related thing; I didn't know RISC-V 32-bit could be implemented with under 6K transistors. Native Linux executed on under 6K transistors. That's pretty cool.

RISC-V is a whole family. The barest minimal RISC-V (which this would be) is without FP, memory management and a whole raft of things: the sort of thing you'd stick in a very embedded device. So no Linux on that. There are specific RISC-V configurations for Linux.


> The original RISC-I had something like 44K transistors [Wikipedia]!
>
> They didn't have the transistor-count/circuitry-reuse optimizing software in the 1980s that exists today.
>
> Apparently, the article mentions simplifications such as calculating 32-bit registers 1 bit at a time, requiring 32 clock cycles for one addition. Other than that, elsewhere, I just read that they pulled off quite a few optimization feats to cram a 32-bit native instruction set into under 6K transistors.
>
> I wonder how efficient this RISC-V CPU would be compared to a Z-80 (~8900 transistors) in silicon manufactured from a 1980s-era fab!
>
> EDIT: Napkin exercise moment, checked some stats on some retro CPUs. Even 32 clock cycles for 32-bit math seems ginormously faster than a 6502 doing 32-bit addition (multiple hundred cycles), or even a Z80 (almost a hundred cycles). This does not consider memory vs register performance, as an unknown, though...

100 cycles for a 32 bit addition on a Z80? What on earth are you doing?

    ADD IX,BC       ; low word:  IX = IX + BC, sets carry
    ADC HL,DE       ; high word: HL = HL + DE + carry

30 cycles I think.

Edit: oh, and an adder of any size can be chained from smaller ones. A 1-bit adder has 3 inputs (two operands + carry-in) and 2 outputs (sum and carry-out). Chain the carry-out to the carry-in of the next one in hardware (or do it in software with ADC instructions...), rinse and repeat. It's as slow as it takes for the carry to ripple through.

That's how the 68000 did 32-bit arithmetic even though it only had three 16-bit adders: two of them were directly ganged to make a 32-bit address generation unit, and microcode did the work for the 32-bit operations on the 16-bit ALU. So addresses were done fast, but arithmetic took twice as long as you'd expect of a "32-bit" CPU. The 16-bit ALU was why there were limits on the 32-bit operations.

All fixed in the 68020 onwards of course.
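The same chaining works at any limb width, which is what ADC sequences on the 6502/Z80 (8-bit limbs) and the 68000's microcoded 32-bit operations on its 16-bit ALU have in common. A hedged Python model of that idea; `add_with_carry` and `wide_add` are hypothetical helper names, and it counts ALU passes only, not real cycle timings.

```python
def add_with_carry(a: int, b: int, carry: int, limb_bits: int) -> tuple[int, int]:
    """Model of an ADC-style add on a limb_bits-wide ALU: returns (sum, carry_out)."""
    total = a + b + carry
    return total & ((1 << limb_bits) - 1), total >> limb_bits


def wide_add(x: int, y: int, width: int = 32, limb_bits: int = 8) -> tuple[int, int, int]:
    """Add two width-bit values by chaining limb_bits-wide adds through the carry.

    Returns (sum mod 2**width, final carry, number of ALU passes).
    """
    if width % limb_bits:
        raise ValueError("width must be a multiple of limb_bits")
    mask = (1 << limb_bits) - 1
    carry = 0                        # like CLC / OR A before the first ADC
    result = 0
    passes = width // limb_bits
    for i in range(passes):          # least significant limb first
        shift = i * limb_bits
        s, carry = add_with_carry((x >> shift) & mask, (y >> shift) & mask, carry, limb_bits)
        result |= s << shift
    return result, carry, passes
```

`wide_add(0x0001FFFF, 1, limb_bits=16)` returns `(0x20000, 0, 2)`, i.e. two passes through a 16-bit ALU, while the default 8-bit limbs take four passes for the same result. With `limb_bits=1` it degenerates into a bit-serial adder taking 32 passes.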


> ADC HL,DE

I thought ADC only existed for 8-bit, and that you'd need ADD without carry if you combine two 8-bit registers into one 16-bit register. I thought the Z80 didn't have automatic carries between consecutive 16-bit ADDs.

But I am now rethinking, even with corrections, I think you can do it in under 50 cycles, just not 25-30. So not much slower.

My memory is foggy about the Z80's capabilities and limitations. Decades ago, I mainly did assembly on 6502s, which didn't have this convenience of combining two 8-bit registers into a 16-bit register. The 6502 only has A, X, and Y, all 8-bit, so you take the hit of accessing memory for 32-bit values. So 8 memory accesses.

[6502 code]

Yes. Even before you posted, I re-evaluated, and you can add two 32-bit memory values (8 bytes read, 4 bytes written) on a 6502 in less than 100 cycles, even with the cost of memory accesses. That's consistent with your loop and unrolled versions. So yeah, not much of a performance advantage after all.

I think I misremembered based on 32-bit floats (those IEEE 754 operations are still gonna take you low hundreds of cycles on a 6502), not integers. I have hereby been self-corrected.

Yeah, the extra transistors on those old processors do speed up 8-bit and 16-bit ops a fair bit.

That RISC-V in under 6K transistors may not be much of an advantage at the same clock frequency after all. Still, the article's RISC-V implementation is a great transistor-count optimization, that's a 32-bit CPU with fewer transistors than a Z80.


> I thought ADC only existed for 8-bit, and that you'd need ADD without carry if you combine two 8-bit registers into one 16-bit register. I thought the Z80 didn't have automatic carries between consecutive 16-bit ADDs.
>
> But I am now rethinking, even with corrections, I think you can do it in under 50 cycles, just not 25-30. So not much slower.
>
> My memory is foggy about the Z80's capabilities and limitations. Decades ago, I mainly did assembly on 6502s, which didn't have this convenience of combining two 8-bit registers into a 16-bit register. The 6502 only has A, X, and Y, all 8-bit, so you take the hit of accessing memory for 32-bit values. So 8 memory accesses.
>
> Yes. Even before you posted, I re-evaluated, and you can add two 32-bit memory values (8 bytes read, 4 bytes written) on a 6502 in less than 100 cycles, even with the cost of memory accesses. That's consistent with your loop and unrolled versions. So yeah, not much of a performance advantage after all.
>
> I think I misremembered based on 32-bit floats (those IEEE 754 operations are still gonna take you low hundreds of cycles on a 6502), not integers. I have hereby been self-corrected.
>
> Yeah, the extra transistors on those old processors do speed up 8-bit and 16-bit ops a fair bit.
>
> That RISC-V in under 6K transistors may not be much of an advantage at the same clock frequency after all. Still, the article's RISC-V implementation is a great transistor-count optimization, that's a 32-bit CPU with fewer transistors than a Z80.

Err.... I literally posted 30 cycles on the Z80.

ADD IX,BC -> fairly standard (you can do lots of the HL ops on IX/IY though not the ED instructions) - 15 cycles.

ADC HL,DE -> look! 16 bit ADC! in the ED instructions, so HL only, not IX/IY. There's no 16 bit SUB, but that's ok, there's a 16 bit SBC and you can clear carry with a single instruction readily enough (AND A, OR A...)... 15 cycles.

It's all done using the 8-bit ALU, of course, but using the register pairs (IX/IY are also register pairs, but their 8-bit halves aren't openly documented; there's no problem accessing IXL, IXH, IYL, IYH if you really want to).

Edit: both of those instructions are in the Z80 superset, not the original 8080 set. The 8080 only had basic 16 bit ADD. Not ADC, not SBC. If you wanted more than 16 bit ADD you needed to string ADC A/SBC A along (well the 8080 version of those mnemonics)

If you want to do it byte by byte in memory...

    LD B,4          ; or 8... or more...
    OR A            ; clear carry before the first ADC
    @@:
    LD A,(DE)       ; 7*n
    ADC A,(HL)      ; 7*n
    LD (HL),A       ; or (DE)? whichever. 7*n
    INC DE          ; 6*n
    INC HL          ; 6*n
    DJNZ @B         ; 13*n-5 (the last doesn't jump)

Not a particularly efficient way, but flexible.

    LD HL,(x)       ; 16
    LD DE,(y)       ; 20
    ADD HL,DE       ; 11
    LD (x),HL       ; 16
    LD HL,(x+2)     ; 16
    LD DE,(y+2)     ; 20
    ADC HL,DE       ; 15
    LD (x+2),HL     ; 16

There's the add part of it in 26 cycles. Cheating a bit because by using HL and DE for all the work I get to leverage the cheaper 8080 set opcodes like LD HL,(nn), ADD HL,DE... those extra cycles are fetching the longer Z80 instructions in the ED prefixes. I saved 4 cycles there, but I'm limited in how I fetch it from memory and store it back. The only "cheap" Z80 specific instructions are the ones that fit around the 8080 instructions in the original table. Considering a quarter of the table is just register to register moves, there weren't as many as you'd want... the whole JR stuff could only use 4 condition codes instead of the full 8 because of it.
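To make the comparison concrete, here is a small Python tally of the T-states annotated in the two listings above, plus LD B,n (7) and OR A (4), which the post leaves unannotated. It is only a sketch: it assumes no memory wait states, and the table and function names are made up for illustration.

```python
# Per-instruction T-states as annotated in the two Z80 listings above.
BYTE_LOOP_SETUP = {"LD B,n": 7, "OR A": 4}
BYTE_LOOP_BODY = {"LD A,(DE)": 7, "ADC A,(HL)": 7, "LD (HL),A": 7, "INC DE": 6, "INC HL": 6}
DJNZ_TAKEN, DJNZ_NOT_TAKEN = 13, 8

REGISTER_PAIR_VERSION = [
    ("LD HL,(x)", 16), ("LD DE,(y)", 20), ("ADD HL,DE", 11), ("LD (x),HL", 16),
    ("LD HL,(x+2)", 16), ("LD DE,(y+2)", 20), ("ADC HL,DE", 15), ("LD (x+2),HL", 16),
]


def byte_loop_tstates(n_bytes: int) -> int:
    """Total T-states for the LD/ADC/LD/INC/INC/DJNZ loop over n_bytes."""
    per_iter = sum(BYTE_LOOP_BODY.values())
    # DJNZ jumps on every pass except the last, which falls through.
    djnz = DJNZ_TAKEN * (n_bytes - 1) + DJNZ_NOT_TAKEN
    return sum(BYTE_LOOP_SETUP.values()) + per_iter * n_bytes + djnz


def register_pair_tstates() -> tuple[int, int]:
    """Return (total T-states, T-states spent in the two 16-bit adds)."""
    total = sum(t for _, t in REGISTER_PAIR_VERSION)
    adds = sum(t for op, t in REGISTER_PAIR_VERSION if op.startswith(("ADD", "ADC")))
    return total, adds
```

`byte_loop_tstates(4)` gives 190 and `register_pair_tstates()` gives `(130, 26)`, so the register-pair version spends 26 T-states adding and the other 104 moving data between memory and registers.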

Did I spend too much time doing Z80 assembly? Why, yes, yes I did.


> ADD IX,BC -> fairly standard (you can do lots of the HL ops on IX/IY though not the ED instructions) - 15 cycles.

Had to pull the extended Z80 manual: I stand corrected (page 190). Thank you!

That said, I believe it might not have been officially documented in the dusty 1976 manual. It must have worked anyway: an undocumented-became-documented opcode, with the documentation updated a few years later; there's lots of old documentation missing info from the early days. Whatever the reason, I stand corrected.


(OT) This caused acute flashbacks to the appalling Ferranti F-100L processor that was supposed to be used in UK military applications.

I don't know if he was the lead, but there was a UK engineer named Ivor Catt who had the idea that a bit-sequential CPU could be made fast enough to match a parallel design while being smaller and using less power. His idea was that you'd be using ECL at 500MHz versus the TTL of the era at around 10MHz.

The result was something slower than an 8-bit CMOS microcontroller, since it did addition one bit at a time at 16MHz.

I think even Ferranti knew it was a lemon.


> The DOI link seems broken.

It matches the Nature article. DOIs can often take days to become active.


> OT, but what happened to Graphene? It was getting hyped and then 3D printing or wearables became The Thing and we don't hear about it much.
>
> Is it like aerogel, where it suddenly turns up somewhere quite mundane?

Supercapacitors are in production (a company called Skeleton makes them), and I remember a UK company using graphene to make some sort of sensor (details escape me). It's still expensive, but it has found some niches.


Goes back further than that. WIRED magazine were throwing around the term in the early ’90s. Ref: vol 2.02 Feb 1994 p71 “memegraphics”, vol 3.12 Dec 1995 “meme”.

I remember a whole article on the concept, but I didn’t find it. Hopefully it wasn’t in vol 1.3, which is still borrowed. In my head it is right next to the article on smart drugs with Timothy Leary; I didn’t spot that one either.


tired_luddite

Smack-Fu Master, in training

Wow, thermal paste, we are living in the future! I was curious where they use graphene, and every reference is "could be used as" and "research shows". The commercial adoption is very mundane: no space lifts or cures for cancer, it's more like slightly better hull coatings.


> It's so old, it pre-dates calling things memes....

WIRED magazine vol 2.02 Feb 1994 p71 “Memegraphics”
