In recent months, the AI industry's biggest boosters have started converging on a public expectation that we're on the verge of “artificial general intelligence” (AGI)—virtual agents that can match or surpass "human-level" understanding and performance on most cognitive tasks.

OpenAI is quietly seeding expectations for a "PhD-level" AI agent that could operate autonomously at the level of a "high-income knowledge worker" in the near future. Elon Musk says that "we'll have AI smarter than any one human probably" by the end of 2025. Anthropic CEO Dario Amodei thinks it might take a bit longer but similarly says it's plausible that AI will be "better than humans at almost everything" by the end of 2027.

A few researchers at Anthropic have, over the past year, had a part-time obsession with a peculiar problem.

Can Claude play Pokémon?

A thread: pic.twitter.com/K8SkNXCxYJ

— Anthropic (@AnthropicAI) February 25, 2025

Last month, Anthropic presented its “Claude Plays Pokémon” experiment as a waypoint on the road to that predicted AGI future. It's a project the company said shows "glimmers of AI systems that tackle challenges with increasing competence, not just through training but with generalized reasoning." Anthropic made headlines by trumpeting how Claude 3.7 Sonnet’s "improved reasoning capabilities" let the company's latest model make progress in the popular old-school Game Boy RPG in ways "that older models had little hope of achieving."

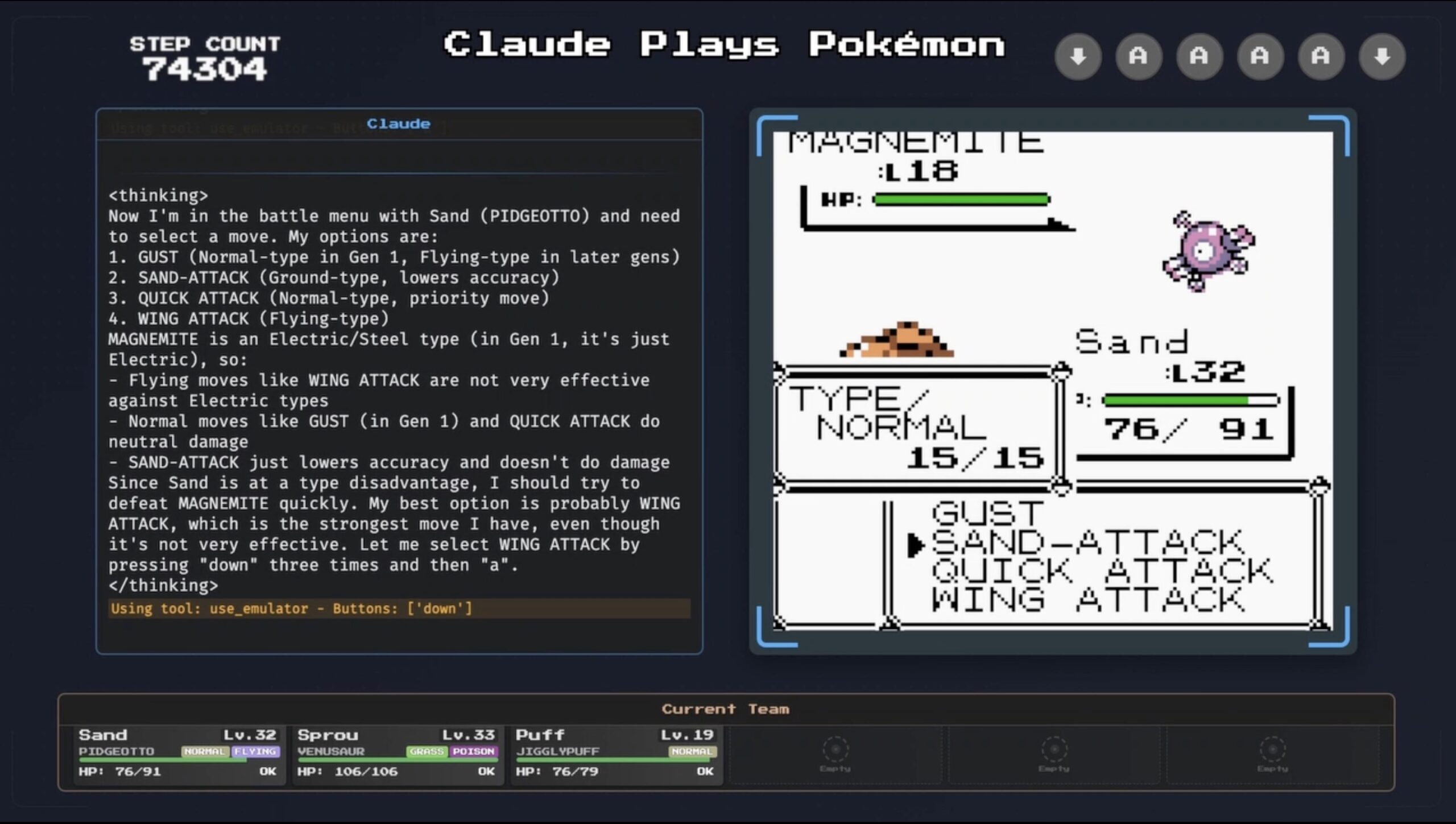

While Claude models from just a year ago struggled even to leave the game’s opening area, Claude 3.7 Sonnet was able to make progress by collecting multiple in-game Gym Badges in a relatively small number of in-game actions. That breakthrough, Anthropic wrote, was because the “extended thinking” by Claude 3.7 Sonnet means the new model "plans ahead, remembers its objectives, and adapts when initial strategies fail" in a way that its predecessors didn’t. Those things, Anthropic brags, are "critical skills for battling pixelated gym leaders. And, we posit, in solving real-world problems too."

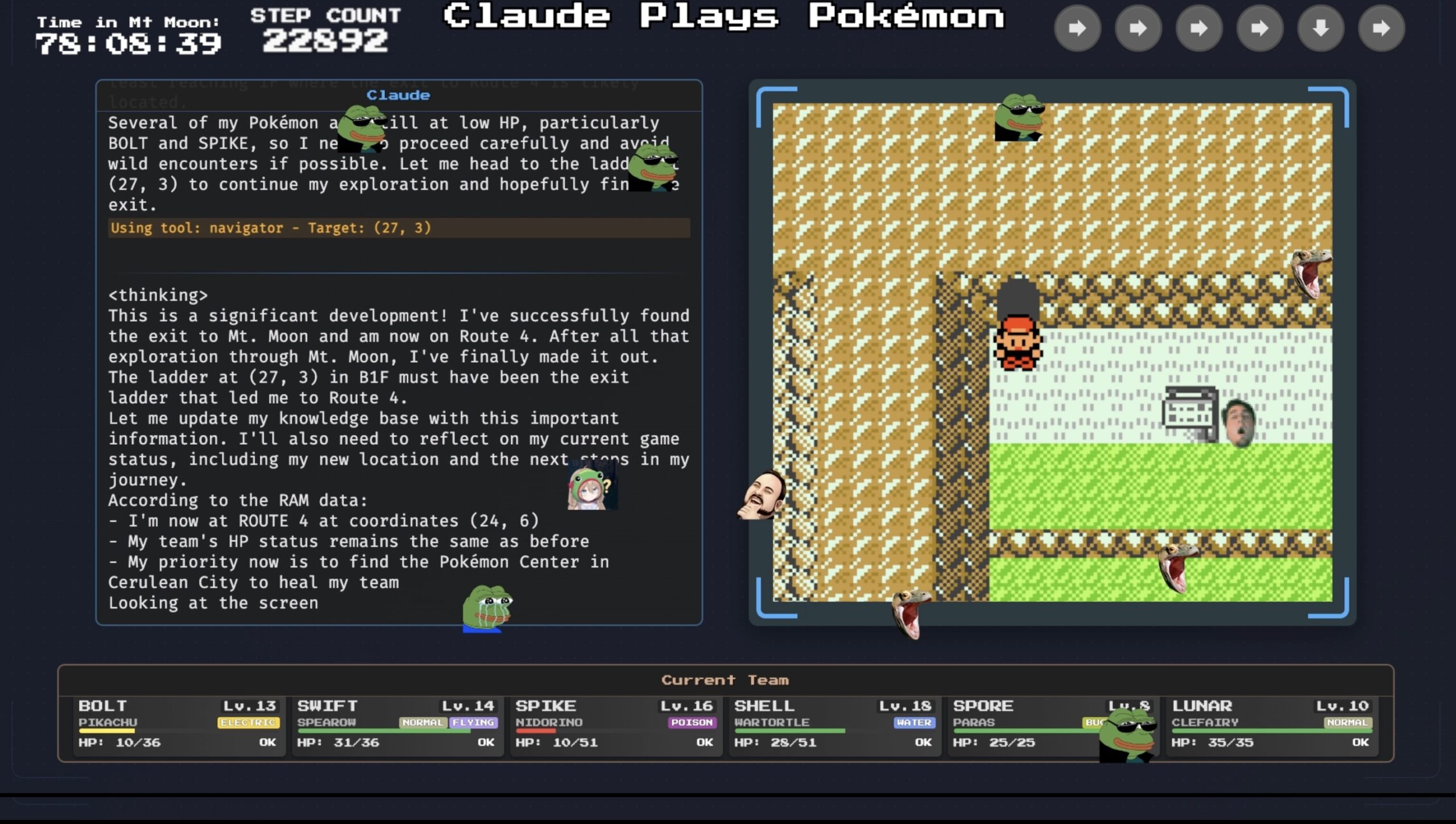

But relative success over previous models is not the same as absolute success over the game in its entirety. In the weeks since Claude Plays Pokémon was first made public, thousands of Twitch viewers have watched Claude struggle to make consistent progress in the game. Despite long "thinking" pauses between each move—during which viewers can read printouts of the system’s simulated reasoning process—Claude frequently finds itself pointlessly revisiting completed towns, getting stuck in blind corners of the map for extended periods, or fruitlessly talking to the same unhelpful NPC over and over, to cite just a few examples of distinctly sub-human in-game performance.

After getting stuck by a line of trees, he visited a building where the NPC mentioned that pokemon can use the CUT command while unconscious. And Claude was like-- "that's a HINT!" And then he walked outside and cut down the unique looking tree.

Like so many AI things, it was both stupid and magical at the same time. Baby steps.

Finding a game with the Game Boy-like simplicity but general depth of Pokemon that was created after training would be difficult enough, but most modern games will also benefit from player expectations built up over the decades. Pokemon was the first exposure to that sort of game for a lot of young players who didn't get much benefit from their existing knowledge of games.

The hubris of these people is just mind-blowing to me. It’s like they have literally no idea what they are doing, from a tech (never mind product) development standpoint.

Here is a map of Vermilion City. It literally consists of 7 enterable buildings. But Claude would spend 6-8 hours at a time just wandering around, occasionally going in and out of buildings, desperately trying to find the entrance to the S.S. Anne.

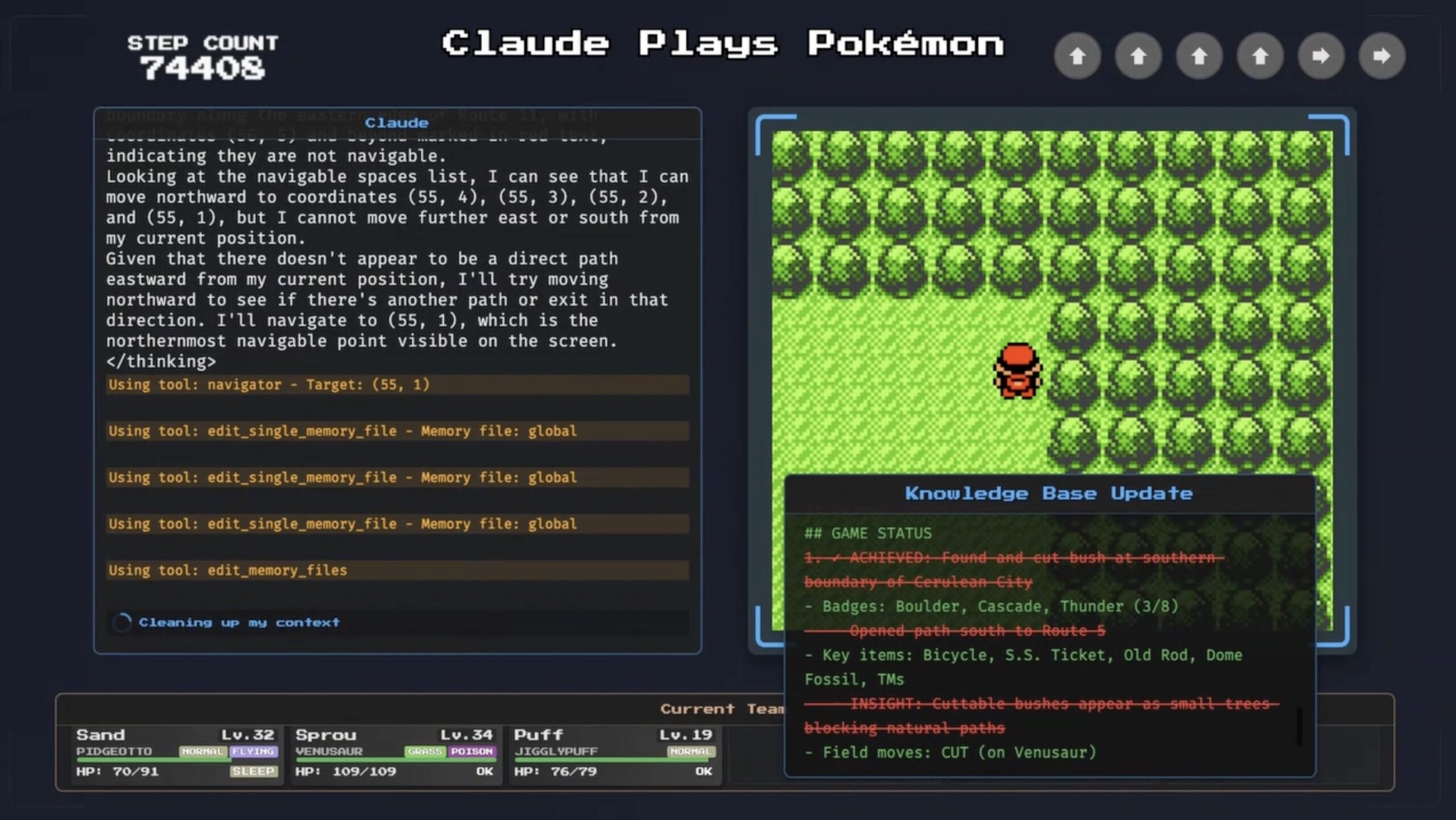

It makes sense that it would get confused. It knew the coordinates of the entrance were directly south of its current position at the cluster of buildings, because it had been there before and recorded the coordinates. So it would go south. But there's no straight path from there to the dock (you have to go east first), so Claude would hit a dead end and head back.
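To make that failure mode concrete, here is a minimal sketch on a made-up toy grid (not the real Vermilion City layout). It compares a walker that only steps straight toward the recorded goal coordinates with a plain breadth-first search. The grid, `greedy_walk`, and `bfs_path` are all illustrative assumptions; nothing here reflects how the actual Claude Plays Pokémon harness navigates.

```python
from collections import deque

# Hypothetical toy map: '#' is blocked, '.' is walkable,
# 'S' is the start, 'G' is the goal sitting directly south of S.
GRID = [
    "#######",
    "#..S..#",
    "#.#.#.#",
    "#####.#",
    "#..G..#",
    "#######",
]

def find(ch):
    """Return the (row, col) of the first cell containing ch."""
    for r, row in enumerate(GRID):
        c = row.find(ch)
        if c != -1:
            return (r, c)
    raise ValueError(f"{ch!r} not on the map")

def walkable(pos):
    r, c = pos
    return 0 <= r < len(GRID) and 0 <= c < len(GRID[r]) and GRID[r][c] != "#"

def greedy_walk(start, goal):
    """Only ever step closer to the goal's coordinates; stop when blocked."""
    path, pos = [start], start
    while pos != goal:
        (r, c), (gr, gc) = pos, goal
        candidates = []
        if gr != r:  # close the vertical gap first
            candidates.append((r + (1 if gr > r else -1), c))
        if gc != c:  # then the horizontal gap
            candidates.append((r, c + (1 if gc > c else -1)))
        nxt = next((p for p in candidates if walkable(p)), None)
        if nxt is None:
            return path, False  # dead end: no step reduces the distance
        path.append(nxt)
        pos = nxt
    return path, True

def bfs_path(start, goal):
    """Breadth-first search: explores detours, returns a shortest path or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        pos = queue.popleft()
        if pos == goal:
            path = []
            while pos is not None:
                path.append(pos)
                pos = parents[pos]
            return path[::-1]
        r, c = pos
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if walkable(nxt) and nxt not in parents:
                parents[nxt] = pos
                queue.append(nxt)
    return None

start, goal = find("S"), find("G")
greedy_path, reached = greedy_walk(start, goal)
shortest = bfs_path(start, goal)
print("greedy reached goal:", reached, "after", len(greedy_path) - 1, "step(s)")
print("BFS first move:", shortest[1], "total moves:", len(shortest) - 1)
```

On this toy map the straight-line walker takes one step south and stalls, while the search has to start by moving east, away from the goal's column, before it can come back around. That is the same shape of detour described above.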

What's harder to justify is why it would make that same loop 20 times in a row. And then wander off somewhere else for a few hours, and come back and do it all over again.

It essentially has no long-term memory. It does have an elaborate system of note-taking programmed in, but it rarely takes useful notes, and when it does, it usually doesn't pay attention to them.

It never comes up with meta-strategies, like "always hug the right wall" or "use the notes to count how many times you've been to each place". (Actually, I heard that it thought of the first one at some point, but it didn't manage to execute on it.) If the prompt contained these meta-strategies, it might help its performance, but that would be cheating, and it still would probably have a hard time executing.
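For illustration, the second meta-strategy (counting visits in the notes) could look something like the sketch below. `VisitNotes`, its threshold, and the place labels are all hypothetical; this is not the note-taking system the stream actually uses, just one simple way to turn "count how many times you've been to each place" into something checkable.

```python
from collections import Counter

class VisitNotes:
    """Hypothetical note-taking helper that counts arrivals at named places."""

    def __init__(self, loop_threshold=3):
        self.visits = Counter()
        self.loop_threshold = loop_threshold  # assumed cutoff for "I'm looping"

    def record(self, place):
        self.visits[place] += 1

    def is_looping(self, place):
        # Flag a place once it has been reached loop_threshold times or more.
        return self.visits[place] >= self.loop_threshold

    def least_visited(self, options):
        # Counter returns 0 for unseen places, so unexplored options win.
        # min() keeps the first of any tied options.
        return min(options, key=lambda place: self.visits[place])

# Made-up trace of a wandering session; labels are illustrative only.
notes = VisitNotes(loop_threshold=3)
for place in ["south dead end", "Poke Mart", "south dead end", "south dead end"]:
    notes.record(place)

if notes.is_looping("south dead end"):
    next_target = notes.least_visited(["south dead end", "Poke Mart", "east path"])
    print("Looping detected, try:", next_target)
```

The point of the sketch is only that the bookkeeping itself is trivial. The hard part, as described above, is getting the model to consult the notes and act on them consistently.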

When it does make progress, it's often by dumb luck.

But I don't want to be too negative.

For one thing, I find the stream fascinating to watch. I hate AI hype as much as the next person, and that includes the way Anthropic has talked about the Pokémon bot as if it were more capable than it really is. But when I actually watch the stream, I can't help cheering the bot on. The short-term thinking, the failure to understand what's right there on the screen, it all makes the bot come across like a dog or cat. It's not demonstrating AGI, but it's doing its best!

(It's actually a lot more entertaining this way than it would be if it were more competent. Just like DeepDream was a lot more entertaining than modern image generators, and Markov chains were more entertaining than LLMs.)

Also… I tried running my own clone of the experiment with other LLMs from Google and OpenAI. They did much worse. To be fair, I didn't implement all the bells and whistles, which partly explains the underperformance. But in their attempts to get past the first ~2 rooms of the game, they would constantly do things I've never seen happen in the ClaudePlaysPokemon stream, like:

- Try to talk to an NPC, fail, then hallucinate the dialogue that the NPC is supposed to say.

- Think a text box is still there after it's been dismissed; or think the menu has been dismissed when it's still there. Then try to move, fail, and conclude the game is broken.

Which suggests that Claude 3.7 really is a meaningful step up from the state of the art from like half a year ago. As much as LLMs seem to be reaching a performance asymptote, there's still room to grow. For better or worse, maybe it won't be too long before we see an LLM that can navigate Pokémon with confidence.