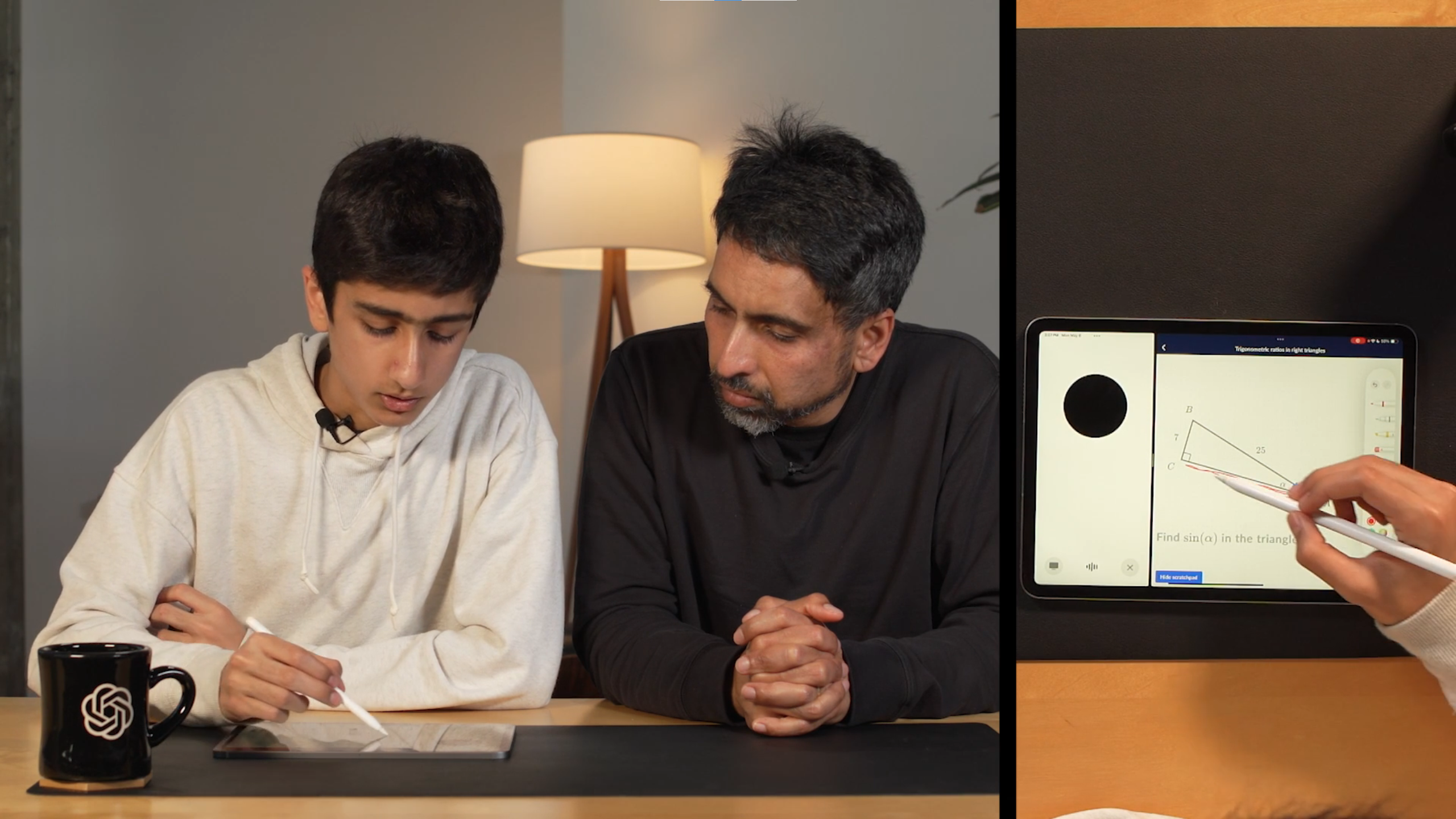

At this point, anyone with even a passing interest in AI is very familiar with the process of typing out messages to a chatbot and getting back long streams of text in response. Today's announcement of GPT-4o, which lets users converse with a chatbot using real-time audio and video, might seem like a mere lateral evolution of that basic interaction model.

After looking through more than a dozen video demos OpenAI posted alongside today's announcement, though, I think we're on the verge of something more like a sea change in how we think of and work with large language models. While we don't yet have access to GPT-4o's audio-visual features ourselves, the important non-verbal cues on display here, both from GPT-4o and from the users, make the chatbot instantly feel much more human. And I'm not sure the average user is fully ready for how they might feel about that.

It thinks it’s people

Take this video, where a newly expectant father looks to GPT-4o for an opinion on a dad joke ("What do you call a giant pile of kittens? A meow-ntain!"). The older GPT-4 version of ChatGPT could easily type out the same responses of "Congrats on the upcoming addition to your family!" and "That's perfectly hilarious. Definitely a top-tier dad joke." But there's much more impact in hearing GPT-4o give that same information in the video, complete with the gentle laughter and rising and falling vocal intonations of a lifelong friend.

Or look at this video, where GPT-4o finds itself reacting to images of an adorable white dog. The AI assistant immediately dips into that high-pitched, baby-talk-ish vocal register that will be instantly familiar to anyone who has encountered a cute pet for the first time. It's a convincing demonstration of what xkcd's Randall Munroe famously identified as the "You're a kitty!" effect, and it goes a long way toward persuading you that GPT-4o, too, is just like people.
