On November 22, a few days after OpenAI fired (and then re-hired) CEO Sam Altman, The Information reported that OpenAI had made a technical breakthrough that would allow it to “develop far more powerful artificial intelligence models.” Dubbed Q* (pronounced “Q star”), the new model was “able to solve math problems that it hadn’t seen before.”

Reuters published a similar story, but details were vague.

Both outlets linked this supposed breakthrough to the board’s decision to fire Altman. Reuters reported that several OpenAI staffers sent the board a letter “warning of a powerful artificial intelligence discovery that they said could threaten humanity.” However, “Reuters was unable to review a copy of the letter,” and subsequent reporting hasn’t connected Altman’s firing to concerns over Q*.

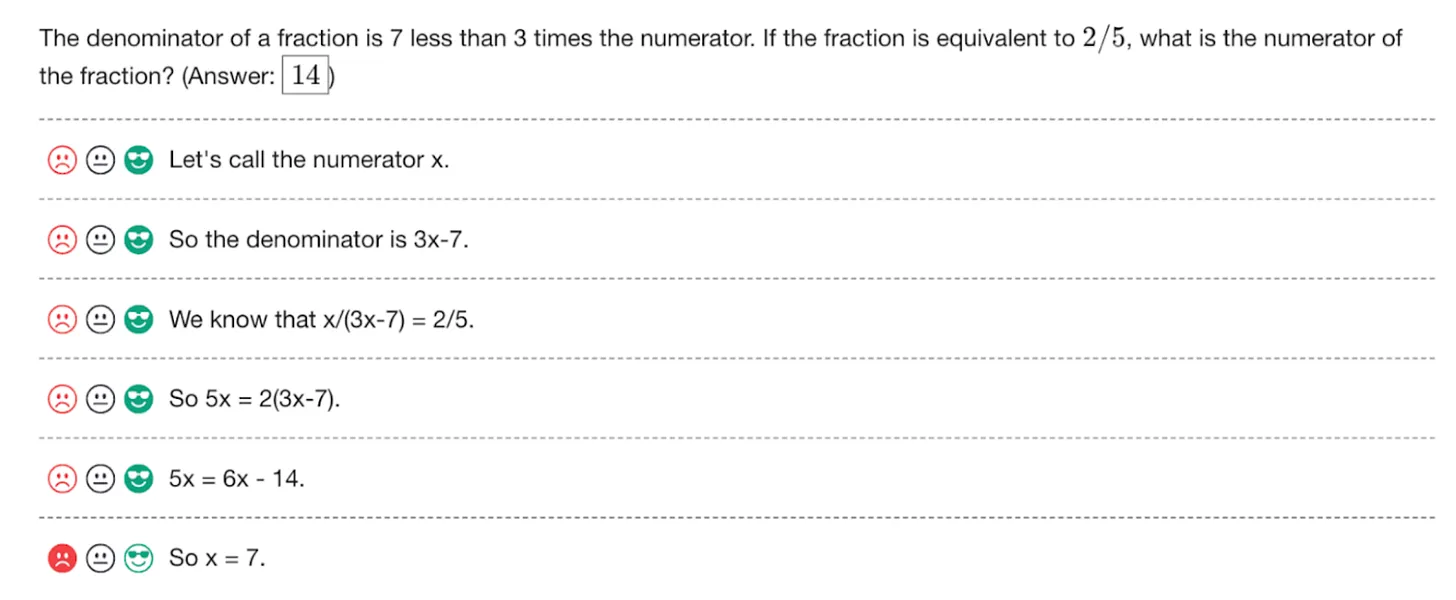

The Information reported that earlier this year, OpenAI built “systems that could solve basic math problems, a difficult task for existing AI models.” Reuters described Q* as “performing math on the level of grade-school students.”

Instead of immediately leaping in with speculation, I decided to take a few days to do some reading. OpenAI hasn’t published details on its supposed Q* breakthrough, but it has published two papers about its efforts to solve grade-school math problems. And a number of researchers outside of OpenAI—including at Google’s DeepMind—have been doing important work in this area.

I’m skeptical that Q*—whatever it is—is the crucial breakthrough that will lead to artificial general intelligence. I certainly don’t think it’s a threat to humanity. But it might be an important step toward an AI with general reasoning abilities.

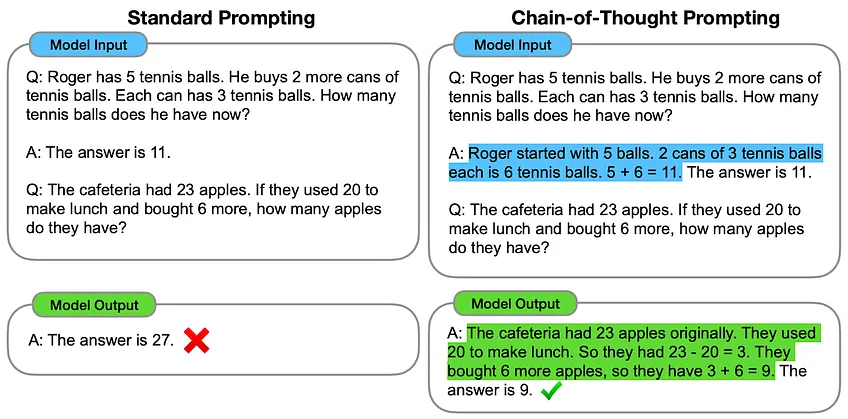

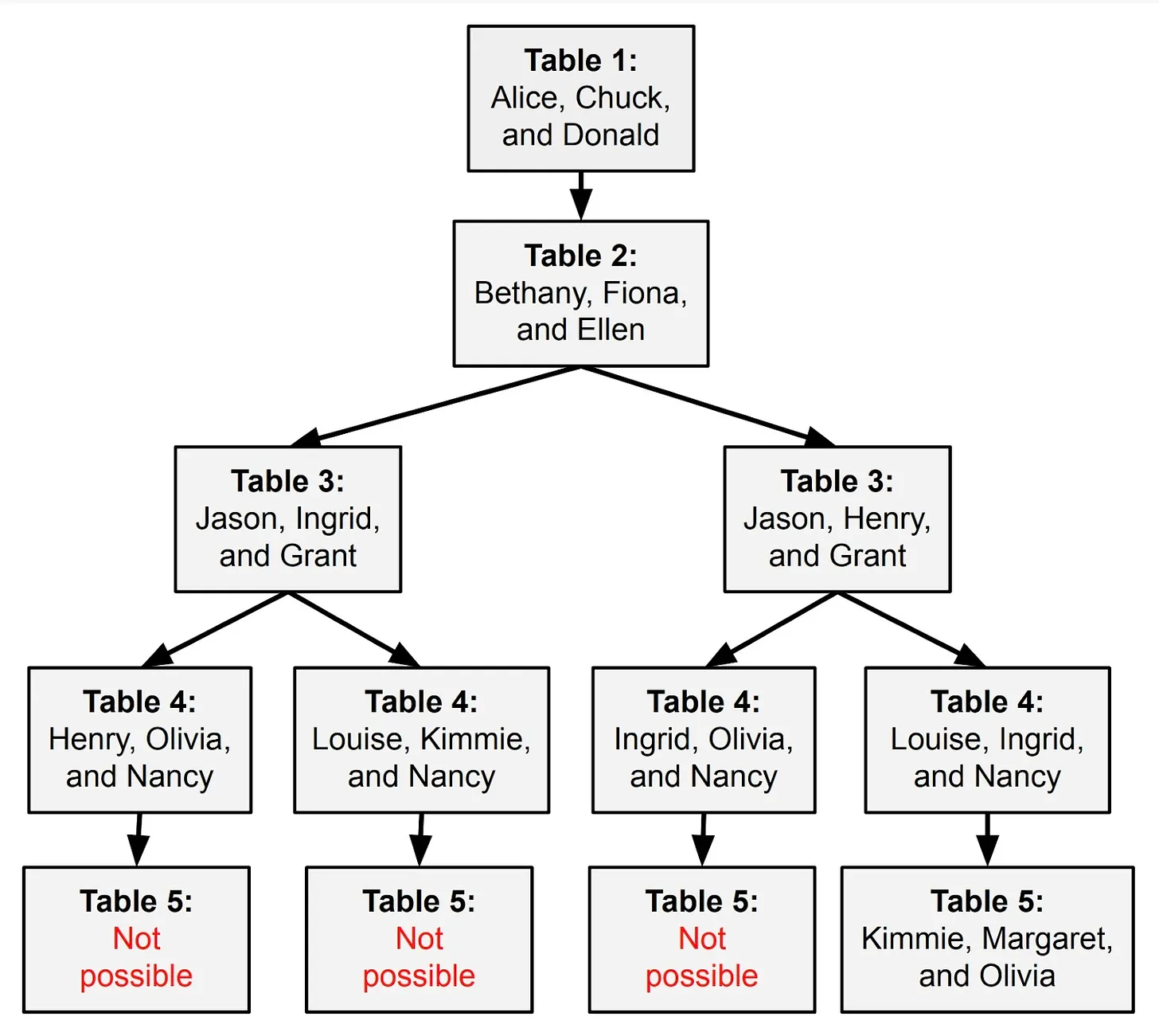

In this piece, I’ll offer a guided tour of this important area of AI research and explain why step-by-step reasoning techniques designed for math problems could have much broader applications.
